Chris Pollett >

Students >

Ayan

( Print View)

[Bio]

[Blog]

[Q-Learning for Vacuum World - PDF]

[Playing Atari with Deep Reinforcement Learning - PDF]

[The Arcade Learning Environment - PDF]

A simple Vacuum World implementation using Q-learning

Description: The popular "vacuum world" is a common introductory problem into the world of artificial intelligence. This is a search problem where our agent is a vacuum that must traverse a world (a grid, in our case) and locate and clean as many dirty cells as possible. Our implementation of vacuum world builds upon this classic problem and attempts to solve for k-many dirty squares (based on a custom policy) utilizing Q-learning. Q-learning is a form of reinforcement learning (where an agent learns about a world through the delivery of periodic rewards) that allows our agent to act on a quality-function, Q(s,a), that denotes the sum of rewards of from state s onwards if action a is taken. The goal of this deliverable to build such a q-function that can enable us to solve the vacuum world problem.

Files: [vacuum-world-q-learning.py]

Steps to run the file:

1. Download the file above.

2. Enter the folder where the file is located, on a CLI of your choice.

3. Run command "pip3 install numpy".

4. Run command "python3 vacuum-world-q-learning.py".

Experiment:

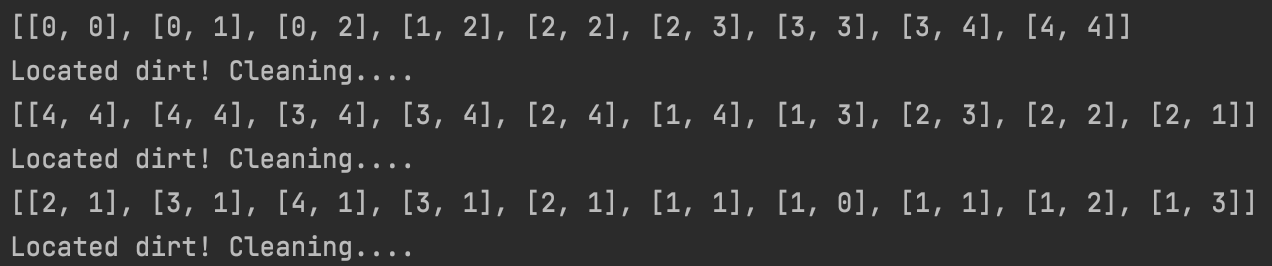

The following output was obtained after training on 5000 episodes.

Dirt is positioned at cells: [4,4], [2,1] and [1,3]

Observations:

We observe that the agent is able to locate the dirt successfully at the appropriate cells without making too many errors. However, there is a slight loss in accuracy when moving from one clean action to searching for the next dirty cell. Ultimately, the results are promising for our first-pass implementation of vacuum world and we expect to improve upon these as we delve into deep q-learning and enhancing our lookup-table into a neural net as part of the second deliverable.