Chris Pollett > Students > Dhole

Print View

[Bio]

[Blog]

[Deliverable #1: Naive Bayes Classifier]

[Hierarchical Agglomerative Clustering - PDF]

[Deliverable #2: Hierarchical Agglomerative Clustering]

[Deliverable #3: Classifiers and Clustering in Yioop]

[Deliverable #4: Recipe plugin scale out]

Hierarchical Agglomerative Clustering Proof of Concept On Sample Documents Set

Aim

To understand and implement Hierarchical Agglomerative Clustering Algorithm in Machine Learning

Fork me on GitHub OR Source Code - IPython Notebook

Introduction

In data mining, hierarchical clustering (also called hierarchical cluster analysis or HCA) is a method of cluster analysis which seeks to build a hierarchy of clusters.

Strategies for hierarchical clustering generally fall into two types:

Agglomerative: This is a "bottom up" approach: each observation starts in its own cluster, and pairs of clusters are merged as one moves up the hierarchy.

Divisive: This is a "top down" approach: all observations start in one cluster, and splits are performed recursively as one moves down the hierarchy.

Description

My primary task was to understand the Hierarchical Agglomerative Clustering Algorithm in Machine Learning and apply it on the corpus of sample documents

The idea was to form clusters of similar documents and keep going upwards i.e. to form more clusters of created clusters until we reach to only one cluster.

Algorithm

-Given n points p1, p2, … pn; We assume all as different clusters c1, c2, c3,.... cn

num = #n

while (num > 1):

find the pair of closest distance points pi,pj i.e. ci,cj

form a new cluster ci:j = ci + cj

remove ci and cj

Vectorization

In order to compare the documents on same standard, I used Vectorization of the documents, which is based on Bag of Words model.

For example, doc1 = "this is cat", doc2 = "this is hat"

bag of words = [this, is, cat, hat]

vector1 = [1 1 1 0]

vector2 = [1 1 0 1]

SourceCode

Documents' Similarity Measure

After having the documents in form of vectors, their similarity is measured based on COSINE SIMILARITY concept.

For example, for the given vectors V1 and V2, we have

cosine_similarity = (V1 dot-product V2) / (|V1| * |V2|)

Closer the value of cosine_similarity to 1, more similar are the documents representing the relevant vectors

SourceCode

Input

corpus = ["this is dog this is cat", "this is cat", "that is different", "he is different", "they are happy"]

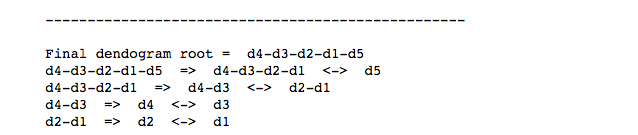

Output Dendogram

A Note On IPython

It would be very convenient if IPython Notbook is used to view and execute the code.

Once IPython Notebook is installed and running, you just need to open the SourceCode File in the Notebook, and try out things directly in the browser easily.

Fork me on GitHub OR Source Code - IPython Notebook