Chris Pollett >

Students >

Kajale


Deliverable 4

Visual and Lingual Emotional Recognition using Deep Learning Techniques

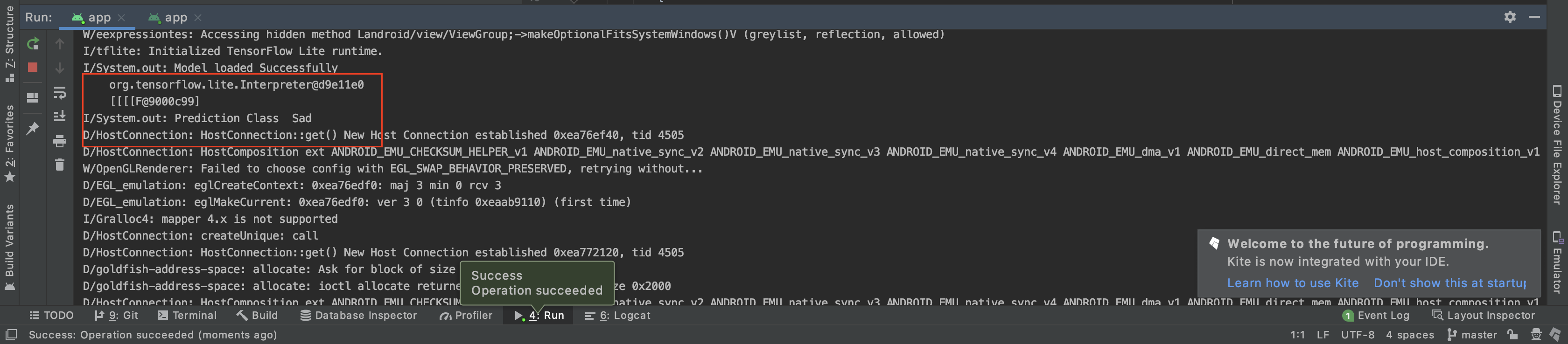

We deployed the model that was trained and tested in Deliverable 1 in an Android application. For initial testing, we ran it on static images. The model is loaded when the application instance is created at startup. Each image is reshaped to dimensions (1, 48, 48, 1) and passed to the model. Because the last layer is Softmax, the model returns an array of probabilities for the 7 classes (Angry, Disgust, Fear, Happy, Sad, Surprise, Neutral). The class with the maximum probability is returned as the result. This model will be further enhanced and deployed with functionality for recognizing emotions from speech as well.
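To make the Softmax step concrete, the sketch below shows in plain Java how a softmax layer turns raw scores into probabilities that sum to 1. It is an illustration only: the class name `SoftmaxSketch` and the example scores are hypothetical, and in the application this computation happens inside the model, not in app code.

```java
final class SoftmaxSketch {
    // Converts raw scores (logits) into probabilities that sum to 1.
    static float[] softmax(float[] scores) {
        // Subtract the largest score before exponentiating, for numerical stability.
        float max = scores[0];
        for (float s : scores) {
            max = Math.max(max, s);
        }
        double[] exps = new double[scores.length];
        double sum = 0.0;
        for (int i = 0; i < scores.length; i++) {
            exps[i] = Math.exp(scores[i] - max);
            sum += exps[i];
        }
        float[] probabilities = new float[scores.length];
        for (int i = 0; i < scores.length; i++) {
            probabilities[i] = (float) (exps[i] / sum);
        }
        return probabilities;
    }

    public static void main(String[] args) {
        // Hypothetical scores for the 7 classes; not taken from the real model.
        float[] scores = {0.5f, -1.0f, 0.1f, 3.0f, 0.0f, 1.2f, 0.7f};
        for (float p : softmax(scores)) {
            System.out.println(p);
        }
    }
}
```

Because softmax preserves the ordering of the scores, the class with the largest raw score is also the class with the largest probability.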

Code Snippet for Prediction


    protected void onCreate(Bundle savedInstanceState) {
        super.onCreate(savedInstanceState);
        setContentView(R.layout.activity_main);
        if (null == savedInstanceState) {
            getSupportFragmentManager().beginTransaction()
                    .replace(R.id.container, FirstFragment.getFragment())
                    .commit();
        }

        // Load the TensorFlow Lite model once, when the activity is created.
        try {
            tflite = new Interpreter(loadModelFile());
            System.out.println("Model loaded Successfully");
            System.out.println(tflite);
        } catch (Exception ex) {
            ex.printStackTrace();
        }

        // Reshape the flattened 2304-value image into (1, 48, 48, 1).
        float[][][][] resizedarray = new float[1][48][48][1];
        int index = 0;
        for (int i = 0; i < 48; i++) {
            for (int j = 0; j < 48; j++) {
                resizedarray[0][i][j][0] = image[index++];
            }
        }

        // Run inference; prediction receives one probability per class.
        float[][] prediction = new float[1][7];
        tflite.run(resizedarray, prediction);

        // Select the class with the highest probability.
        int maxIndex = 0;
        float max = prediction[0][0];
        for (int i = 0; i < prediction[0].length; i++) {
            if (prediction[0][i] > max) {
                max = prediction[0][i];
                maxIndex = i;
            }
        }
        System.out.println("Prediction Class\t" + emotions[maxIndex]);
    }

Here image[] is an array of 2304 values, one per pixel of a 48x48 image flattened into one dimension. onCreate() is called when the application starts. It creates an Interpreter object that holds the tflite model. The tflite.run(input, output) method runs the model on the given input and fills the output array with the probabilities that the image belongs to each class. The class with the highest probability is selected.
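The reshaping and class selection described above can also be written as plain Java helpers that run outside Android, which makes them easy to unit test. This is a minimal sketch, not the application's actual code: the class name `EmotionPostprocessing` and the methods `reshape` and `argMax` are hypothetical, the label order is assumed to match the order in which the classes are listed, and the model output is a made-up array standing in for the result of `tflite.run`.

```java
final class EmotionPostprocessing {
    // Label order is an assumption: it follows the order the classes are listed in the text.
    static final String[] EMOTIONS = {
        "Angry", "Disgust", "Fear", "Happy", "Sad", "Surprise", "Neutral"
    };
    static final int SIZE = 48;

    // Reshapes a flattened, row-major 48x48 image (2304 values) into (1, 48, 48, 1).
    static float[][][][] reshape(float[] image) {
        if (image.length != SIZE * SIZE) {
            throw new IllegalArgumentException(
                "Expected " + (SIZE * SIZE) + " values, got " + image.length);
        }
        float[][][][] input = new float[1][SIZE][SIZE][1];
        for (int i = 0; i < SIZE; i++) {
            for (int j = 0; j < SIZE; j++) {
                input[0][i][j][0] = image[i * SIZE + j];
            }
        }
        return input;
    }

    // Returns the index of the largest value (the first one if there are ties).
    static int argMax(float[] probabilities) {
        int maxIndex = 0;
        for (int i = 1; i < probabilities.length; i++) {
            if (probabilities[i] > probabilities[maxIndex]) {
                maxIndex = i;
            }
        }
        return maxIndex;
    }

    public static void main(String[] args) {
        float[][][][] input = reshape(new float[SIZE * SIZE]);
        // Hypothetical model output, used in place of tflite.run(input, prediction).
        float[][] prediction = {{0.05f, 0.01f, 0.04f, 0.70f, 0.05f, 0.10f, 0.05f}};
        System.out.println("Prediction Class\t" + EMOTIONS[argMax(prediction[0])]);
    }
}
```

With the hypothetical output above, `argMax` selects index 3, which maps to Happy under the assumed label order. Validating the input length up front avoids an out-of-bounds error if an image of the wrong size is passed in.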

Code Snippet for loading the model

    private MappedByteBuffer loadModelFile() throws IOException {
        // Open the model file bundled in the application's assets.
        AssetFileDescriptor fileDescriptor = this.getAssets().openFd("sequential.tflite");
        FileInputStream inputStream = new FileInputStream(fileDescriptor.getFileDescriptor());
        FileChannel fileChannel = inputStream.getChannel();
        // Memory-map only the region of the file that holds the model.
        long startOffset = fileDescriptor.getStartOffset();
        long declaredLength = fileDescriptor.getDeclaredLength();
        return fileChannel.map(FileChannel.MapMode.READ_ONLY, startOffset, declaredLength);
    }

Predicted Output