Tensorboard, GANs

CS256

Chris Pollett

Dec 1 2021

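import os
import time

# must be set before TensorFlow is imported so that its C++ info and warning logs are suppressed
os.environ['TF_CPP_MIN_LOG_LEVEL'] = '2'

import numpy as np
import tensorflow as tf

# take every Keras name from tensorflow.keras rather than mixing it with the standalone keras package
from tensorflow import keras
from tensorflow.keras.datasets import mnist
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Dense, Dropout
from tensorflow.keras.optimizers import RMSprop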

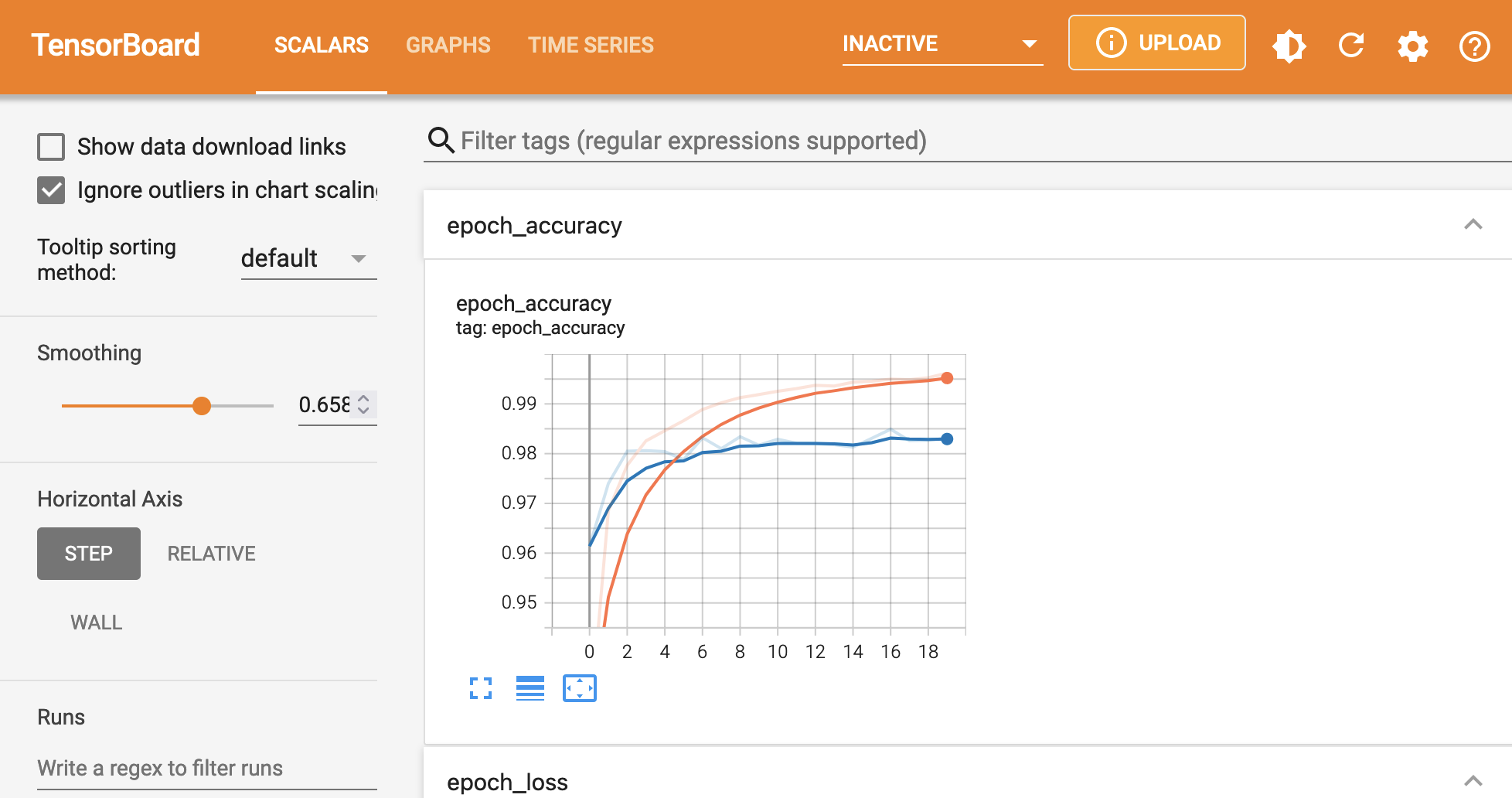

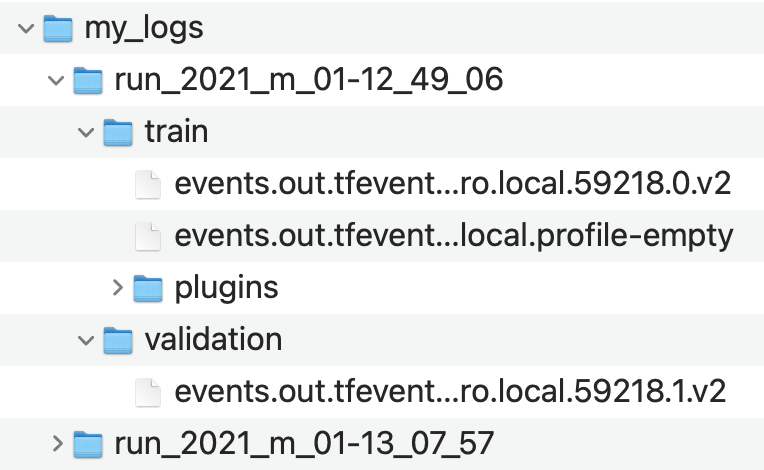

#top level directory to log output of training

root_logdir = os.path.join(os.curdir, "my_logs")

#function to compute a path to a sub folder to hold

#logs for a given run

def per_run_logdir():
    # e.g. run_2021_12_01-10_00_00 (year, month, day, then time of day)
    run_id = time.strftime("run_%Y_%m_%d-%H_%M_%S")
    return os.path.join(root_logdir, run_id)

run_logdir = per_run_logdir()
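To see what the per-run naming scheme buys, here is a minimal sketch that uses only the standard library. The helper `logdir_for` and the two fixed start times are assumptions made for illustration; they are not part of the lecture code. Because the name is ordered year, month, day, then time, run folders sort chronologically, and TensorBoard treats each sub folder of `my_logs` as a separate run.

```python
import os
import time

root_logdir = os.path.join(os.curdir, "my_logs")

def logdir_for(timestamp):
    """Hypothetical helper: log path for a run started at `timestamp`
    (a time.struct_time), named the same way as per_run_logdir()."""
    run_id = time.strftime("run_%Y_%m_%d-%H_%M_%S", timestamp)
    return os.path.join(root_logdir, run_id)

# Two made-up start times, one minute apart (illustration only).
first = logdir_for(time.strptime("2021-12-01 10:00:00", "%Y-%m-%d %H:%M:%S"))
second = logdir_for(time.strptime("2021-12-01 10:01:00", "%Y-%m-%d %H:%M:%S"))

print(first)
print(second)
```

Each call to `fit` started at a different second therefore writes to its own folder, so earlier runs are never overwritten.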

#create a new callback that will do logging

my_tensorboard_callback = keras.callbacks.TensorBoard(run_logdir)

(x_train, y_train), (x_test, y_test) = mnist.load_data()

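# x_train is originally 60000 28x28 grayscale images stored as uint8 values in 0-255;
# flatten each image to a 784-vector and rescale the values to floats in 0-1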

x_train = x_train.reshape(60000, 784)

x_test = x_test.reshape(10000, 784)

x_train = x_train.astype('float32')

x_test = x_test.astype('float32')

x_train /= 255

x_test /= 255

y_train = tf.keras.utils.to_categorical(y_train, 10)

y_test = tf.keras.utils.to_categorical(y_test, 10)
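As a rough picture of what `to_categorical(y, 10)` produces, the sketch below builds the same kind of one-hot rows in plain Python so it runs without TensorFlow. The function `one_hot` and the three sample labels are assumptions for illustration only; the real call returns a NumPy array rather than nested lists.

```python
def one_hot(labels, num_classes):
    """Plain-Python stand-in for to_categorical: one row per label,
    with 1.0 in the column given by the label and 0.0 elsewhere."""
    rows = []
    for label in labels:
        row = [0.0] * num_classes
        row[label] = 1.0
        rows.append(row)
    return rows

# Three sample digit labels (illustration only).
encoded = one_hot([5, 0, 4], 10)
for row in encoded:
    print(row)
```

Each row has length 10, which matches the 10-unit softmax output layer and the `categorical_crossentropy` loss used later.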

model = Sequential()

model.add(Dense(512, activation='relu', input_shape = (784,)))

model.add(Dropout(0.2))

model.add(Dense(512, activation = 'relu'))

model.add(Dropout(0.2))

model.add(Dense(10, activation = 'softmax'))
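For a sense of the size of this network, the following sketch counts its trainable parameters by hand. It assumes the standard fully connected layer (one weight per input-unit pair plus one bias per unit) and relies on Dropout layers having no parameters; the helper name `dense_params` is hypothetical.

```python
def dense_params(n_inputs, n_units):
    """Weights (n_inputs * n_units) plus one bias per unit."""
    return n_inputs * n_units + n_units

# Input size followed by the width of each Dense layer in the model above.
layer_sizes = [784, 512, 512, 10]

counts = [dense_params(a, b) for a, b in zip(layer_sizes, layer_sizes[1:])]
total = sum(counts)

print(counts)  # [401920, 262656, 5130]
print(total)   # 669706
```

Calling `model.summary()` on the model above should report the same per-layer numbers.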

model.compile(loss = 'categorical_crossentropy',
              optimizer = RMSprop(),
              metrics = ['accuracy'])

history = model.fit(x_train, y_train,
                    batch_size = 128, epochs = 20, verbose = 1,
                    validation_data = (x_test, y_test),
                    callbacks = [my_tensorboard_callback] # tell fit to use the callback defined above
                    )
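The training settings above fix how many weight updates the run performs. A small worked calculation, assuming the final partial batch of each epoch is still used (the Keras default for array inputs):

```python
import math

num_examples = 60000   # MNIST training images
batch_size = 128
epochs = 20

# The last batch of each epoch is smaller: 60000 = 468 * 128 + 96
steps_per_epoch = math.ceil(num_examples / batch_size)
last_batch_size = num_examples - (steps_per_epoch - 1) * batch_size
total_updates = steps_per_epoch * epochs

print(steps_per_epoch)  # 469
print(last_batch_size)  # 96
print(total_updates)    # 9380
```

With `verbose = 1`, the progress bar for each epoch counts up to this number of steps.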

tensorboard --logdir=./my_logs --port=8080