Set Cover Approximation, Randomized Approximation Algorithms

CS255

Chris Pollett

May 9, 2022

A greedy algorithm finds a cover by picking, at each stage, the set `S` that covers the greatest number of still-uncovered elements.

GREEDY-SET-COVER(X, F)

U := X

C := ∅

while U ≠ ∅

    select an S ∈ F that maximizes |S ∩ U|

    U := U - S

    C := C ∪ {S}

return C
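The pseudocode above can be sketched in Python as follows. This is a minimal illustration, not a tuned implementation: the function name `greedy_set_cover`, the tie-breaking rule (first maximal set in `F`), and the example instance are assumptions made here for illustration.

```python
def greedy_set_cover(X, F):
    """Return a list of sets from F whose union is X, chosen greedily."""
    uncovered = set(X)                # U := X
    cover = []                        # C := empty set
    while uncovered:                  # while U is not empty
        # Select an S in F that maximizes |S ∩ U| (ties: first such set in F).
        best = max(F, key=lambda S: len(S & uncovered))
        if not best & uncovered:
            raise ValueError("F does not cover X")
        uncovered -= best             # U := U - S
        cover.append(best)            # C := C ∪ {S}
    return cover


# Hypothetical example instance with 12 elements and 6 sets.
X = set(range(1, 13))
F = [
    frozenset({1, 2, 3, 4, 5, 6}),
    frozenset({5, 6, 8, 9}),
    frozenset({1, 4, 7, 10}),
    frozenset({2, 5, 7, 8, 11}),
    frozenset({3, 6, 9, 12}),
    frozenset({10, 11}),
]
cover = greedy_set_cover(X, F)
print(len(cover), [sorted(S) for S in cover])
```

On this instance the greedy cover uses 4 sets, while the third, fourth, and fifth sets of `F` already cover `X`, so the greedy answer is not always optimal.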

Let `H(d) = sum_(i=1)^d 1/i` denote the `d`th harmonic number, defining `H(0) = 0`.

Theorem. GREEDY-SET-COVER is a polynomial-time `r(n)`-approximation algorithm, where

`r(n) = H(max{|S| : S in F})` on instances `(X,F)` of size `n`.

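Proof. Each iteration of the while loop covers at least one previously uncovered element of `X` and selects a set of `F` that was not selected before, so there are at most `min(|X|, |F|)` iterations. The select step can be implemented in `O(|X||F|)` time, which is at most quadratic in the instance size, so the algorithm runs in polynomial time.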

To see that GREEDY-SET-COVER is an `r(n)`-approximation algorithm, we assign a cost of `1` to each set selected by the algorithm, distribute this cost over the elements covered for the first time, and then use these costs to derive the desired relationship between the size of an optimal set cover `C^star` and the size of the cover `C` returned by the algorithm...

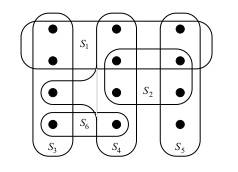

Let `S_i` denote the `i`th set selected by GREEDY-SET-COVER. We spread the cost of selecting `S_i`, which is 1, evenly among the elements covered for the first time by `S_i`. Let `c_x` denote the cost allocated to element `x in X`. If `x` is covered for the first time by `S_i`, then

`c_x = 1/(|S_i - (S_1 cup S_2 cup ... cup S_(i-1))|)`.

At each step of the algorithm, 1 unit of cost is assigned, and so

`|C| = sum_(x in X)c_x`.
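As a small sanity check of this accounting, the sketch below records `c_x` while running the greedy selection and confirms that the costs sum to `|C|`. The function name `greedy_with_costs` and the example instance are hypothetical; exact fractions are used to avoid rounding.

```python
from fractions import Fraction


def greedy_with_costs(X, F):
    """Run the greedy selection and return (cover, cost), where cost[x] = c_x."""
    uncovered = set(X)
    cover, cost = [], {}
    while uncovered:
        best = max(F, key=lambda S: len(S & uncovered))
        newly = best & uncovered          # elements covered for the first time
        if not newly:
            raise ValueError("F does not cover X")
        for x in newly:
            cost[x] = Fraction(1, len(newly))   # spread cost 1 evenly
        uncovered -= newly
        cover.append(best)
    return cover, cost


# Hypothetical example instance.
X = set(range(1, 13))
F = [
    frozenset({1, 2, 3, 4, 5, 6}),
    frozenset({5, 6, 8, 9}),
    frozenset({1, 4, 7, 10}),
    frozenset({2, 5, 7, 8, 11}),
    frozenset({3, 6, 9, 12}),
    frozenset({10, 11}),
]
cover, cost = greedy_with_costs(X, F)
print(len(cover), sum(cost.values()))
```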

The total cost assigned to the sets of the optimal cover `C^star` is

`sum_(S in C^star)sum_(x in S)c_x`,

and as each `x in X` is in at least one `S in C^star`, we have

`sum_(S in C^star)sum_(x in S)c_x ge sum_(x in X)c_x = |C|` (**).

We will show the theorem follows from the following claim:

Claim. `sum_(x in S)c_x le H(|S|)` for all `S in F`.

(Proof of Theorem from Claim). From (**) and the claim, we have

`|C| le sum_(S in C^star) H(|S|)`

`le |C^star| cdot H(max{|S| : S in F})`

(Proof of Claim). Consider any `S in F` and `i = 1, ..., |C|`. Let

`u_i = |S - (S_1 cup S_2 cup ... cup S_(i))|`.

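That is, `u_i` is the number of elements of `S` still uncovered after `S_1, ..., S_i` have been selected. We define `u_0 = |S|`. Let `k` be the least index such that `u_k = 0`, so that every element of `S` is covered by at least one of `S_1, ..., S_k`.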

We have `u_(i-1) ge u_i`, and `u_(i-1) - u_i` elements of `S` are covered for the first time by `S_i`, for `i = 1, ..., k`. Thus,

`sum_(x in S)c_x = sum_(i = 1)^k(u_(i-1) - u_i) cdot 1/(|S_i - (S_1 cup S_2 cup ... cup S_(i -1))|)`

Observe that

`|S_i - (S_1 cup S_2 cup ... cup S_(i - 1))| ge |S - (S_1 cup S_2 cup ... cup S_(i - 1))| = u_(i - 1)`

because `S_i` was chosen greedily: if `S` covered more new elements than `S_i`, the algorithm would have selected `S` instead. This gives

`sum_(x in S)c_x le sum_(i = 1)^k(u_(i-1) - u_i) cdot 1/(u_(i-1))`

`= sum_(i=1)^k sum_(j = u_i + 1)^(u_(i-1))1/(u_(i-1))`

`le sum_(i=1)^k sum_(j = u_i + 1)^(u_(i-1)) 1/j` (because `j le u_(i-1)` for every term of the inner sum)

`= sum_(i=1)^k ( sum_(j = 1)^(u_(i-1)) 1/j - sum_(j = 1)^(u_(i)) 1/j)`

`= sum_(i=1)^k (H(u_(i-1)) - H(u_i))`

`= H(u_0) - H(u_k)` (telescoping series)

`= H(u_0) - H(0)`

`= H(u_0)`

`=H(|S|)`, proving the claim.
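The chain of inequalities only uses the fact that `u_0 ge u_1 ge ... ge u_k = 0`, so it can be checked numerically on arbitrary nonincreasing sequences. The sketch below does this with exact fractions; the helper names `H`, `lhs`, and `random_sequence` are illustrative and not part of the proof.

```python
from fractions import Fraction
import random


def H(d):
    """d-th harmonic number, with H(0) = 0."""
    return sum(Fraction(1, i) for i in range(1, d + 1))


def lhs(u):
    """sum_{i=1}^{k} (u_{i-1} - u_i) / u_{i-1} for u = [u_0, ..., u_k]."""
    return sum(Fraction(u[i - 1] - u[i], u[i - 1]) for i in range(1, len(u)))


def random_sequence(u0, rng):
    """Random nonincreasing sequence from u0 that stops at the first 0."""
    u = [u0]
    while u[-1] > 0:
        u.append(rng.randint(0, u[-1]))   # u_i = u_{i-1} is allowed
    return u


rng = random.Random(0)
for _ in range(1000):
    u = random_sequence(rng.randint(1, 30), rng)
    assert lhs(u) <= H(u[0])

print(lhs([6, 3, 1, 0]), H(6))
```

For the sequence `6, 3, 1, 0` the left-hand side is `3/6 + 2/3 + 1/1 = 13/6`, which is below `H(6) = 49/20`.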

Theorem. Suppose that the random walk algorithm with `r=2n^2` is applied to any satisfiable instance of 2SAT with `n` variables. Then the probability that a satisfying truth assignment will be discovered is at least `1/2`.

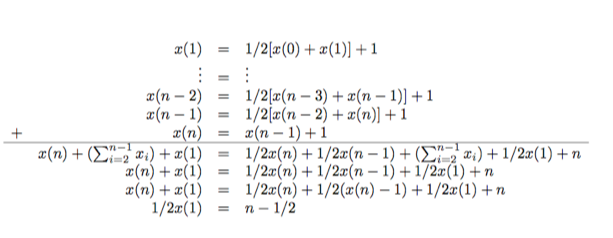
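The figure is not reproduced in the text, so the following is a sketch under the assumption that it shows the standard random walk for 2SAT: start from an arbitrary assignment and, up to `r` times, pick an unsatisfied clause and flip one of its literals at random. The clause encoding (tuples of nonzero integers, `v` for the variable `x_v` and `-v` for its negation) and the names `satisfied` and `random_walk_2sat` are choices made here for illustration.

```python
import random


def satisfied(clause, T):
    """A clause is a tuple of nonzero ints: v means x_v, -v means NOT x_v."""
    return any(T[abs(lit)] == (lit > 0) for lit in clause)


def random_walk_2sat(clauses, n, r=None, rng=None):
    """Return a satisfying assignment (dict) or None if none was found."""
    rng = rng or random.Random()
    r = 2 * n * n if r is None else r
    T = {v: False for v in range(1, n + 1)}     # arbitrary starting assignment
    for _ in range(r):
        unsat = [c for c in clauses if not satisfied(c, T)]
        if not unsat:
            return T
        lit = rng.choice(rng.choice(unsat))     # random literal of an unsatisfied clause
        T[abs(lit)] = not T[abs(lit)]           # flip step
    return T if all(satisfied(c, T) for c in clauses) else None


# Hypothetical satisfiable instance on 4 variables.
clauses = [(1, 2), (-1, 3), (-2, -3), (3, 4)]
results = [random_walk_2sat(clauses, 4, rng=random.Random(seed)) for seed in range(30)]
found = [T for T in results if T is not None]
print(len(found), "of", len(results), "runs found a satisfying assignment")
```

A return value of `None` means only that no satisfying assignment was found within `r` flips; it does not prove that the instance is unsatisfiable.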

Proof. Let `T` be a truth assignment which satisfies the given 2SAT instance `I`. Let `t(i)` denote the expected number of repetitions of the flip step until a satisfying assignment is found starting from an assignment `T'` which differs in at most `i` positions from `T`. Notice:

The worst case is when the relations for `t` in (3) and (4) hold as equations: `x(0)=0`; `x(n)=x(n-1)+1`; `x(i) = 1/2(x(i-1)+x(i+1))+1` for `0 < i < n`.
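One way to finish the argument, assuming the closed form `x(i) = 2in - i^2` (which is not stated in the text above), is to check that it solves the recurrence, so that `x(n) = n^2`, and then apply Markov's inequality: the probability that more than `2n^2` flips are needed is at most `n^2/(2n^2) = 1/2`. The sketch below verifies these two facts for small `n`; the function names are illustrative.

```python
from fractions import Fraction


def x_closed(i, n):
    """Assumed closed form for the worst-case recurrence: x(i) = 2in - i^2."""
    return 2 * i * n - i * i


def solves_recurrence(n):
    """Check x(0) = 0, x(n) = x(n-1) + 1, and x(i) = (x(i-1) + x(i+1))/2 + 1."""
    x = [x_closed(i, n) for i in range(n + 1)]
    boundary = x[0] == 0 and x[n] == x[n - 1] + 1
    # Interior equation multiplied by 2 to stay in integers.
    interior = all(2 * x[i] == x[i - 1] + x[i + 1] + 2 for i in range(1, n))
    return boundary and interior


def markov_failure_bound(n):
    """Markov bound on Pr[flips >= 2n^2], using expected flips <= x(n) = n^2."""
    return Fraction(x_closed(n, n), 2 * n * n)


print(all(solves_recurrence(n) for n in range(1, 50)), markov_failure_bound(7))
```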


Theorem. Given an instance of MAX-3SAT with `n` variables and `m` clauses, the randomized algorithm that independently sets each variable to `1` with probability `1/2` and to `0` with probability `1/2` is a randomized `8/7`-approximation algorithm.

Proof. Define the indicator random variable `Y_i = I{`clause `i` is satisfied`}`. Since no literal appears more than once in the same clause, and since we assume that no variable and its negation appear in the same clause, the settings of the three literals are independent. A clause is not satisfied only if all three of its literals are set to `0`. We thus have `Pr{`clause `i` is not satisfied`} = (1/2)^3 = 1/8`, so `Pr{`clause `i` is satisfied`} = 1 - 1/8 = 7/8` and `E[Y_i] = 7/8`.

Let `Y = sum_i Y_i`. Then

`E[Y] = E[sum_i Y_i] = sum_iE[Y_i] = sum_i 7/8 = (7m)/8`.

As `m` is an upper bound on the number of clauses that can be satisfied, the approximation ratio is at most `m/((7m)/8) = 8/7`, which gives the result.
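The expectation `(7m)/8` can be confirmed exactly on a small instance by averaging over all `2^n` assignments, which is what the uniform random choice does in expectation. The sketch below is illustrative only: the clause encoding (tuples of nonzero integers, `v` for `x_v` and `-v` for its negation), the function names, and the example instance are assumptions made here.

```python
from fractions import Fraction
from itertools import product
import random


def count_satisfied(clauses, assignment):
    """Number of clauses with at least one true literal."""
    return sum(
        any(assignment[abs(lit)] == (lit > 0) for lit in clause)
        for clause in clauses
    )


def random_assignment(n, rng):
    """The randomized algorithm: each variable is true with probability 1/2."""
    return {v: rng.random() < 0.5 for v in range(1, n + 1)}


def expected_satisfied(clauses, n):
    """Exact E[Y], computed by enumerating all 2^n equally likely assignments."""
    total = 0
    for bits in product([False, True], repeat=n):
        assignment = dict(zip(range(1, n + 1), bits))
        total += count_satisfied(clauses, assignment)
    return Fraction(total, 2 ** n)


# Hypothetical instance: every clause has three distinct variables.
n = 4
clauses = [(1, -2, 3), (-1, 2, 4), (2, -3, -4), (1, 3, -4)]
m = len(clauses)
expectation = expected_satisfied(clauses, n)
sample = count_satisfied(clauses, random_assignment(n, random.Random(0)))
print(expectation, sample)
```

Since some assignment must satisfy at least the expected number of clauses, the same enumeration also shows that an assignment satisfying at least `(7m)/8` clauses always exists.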