Deliverable 1

Visual and Lingual Emotional Recognition using Deep Learning Techniques

We designed two convolutional models for facial expression recognition: a sequential model and a depthwise convolutional model. The selected model will be used to recognize facial expressions and predict emotions, and it will be deployed in an Android application for further testing. The purpose of this deliverable was to experiment with different architectures and select the better one for emotion recognition based on facial expressions.

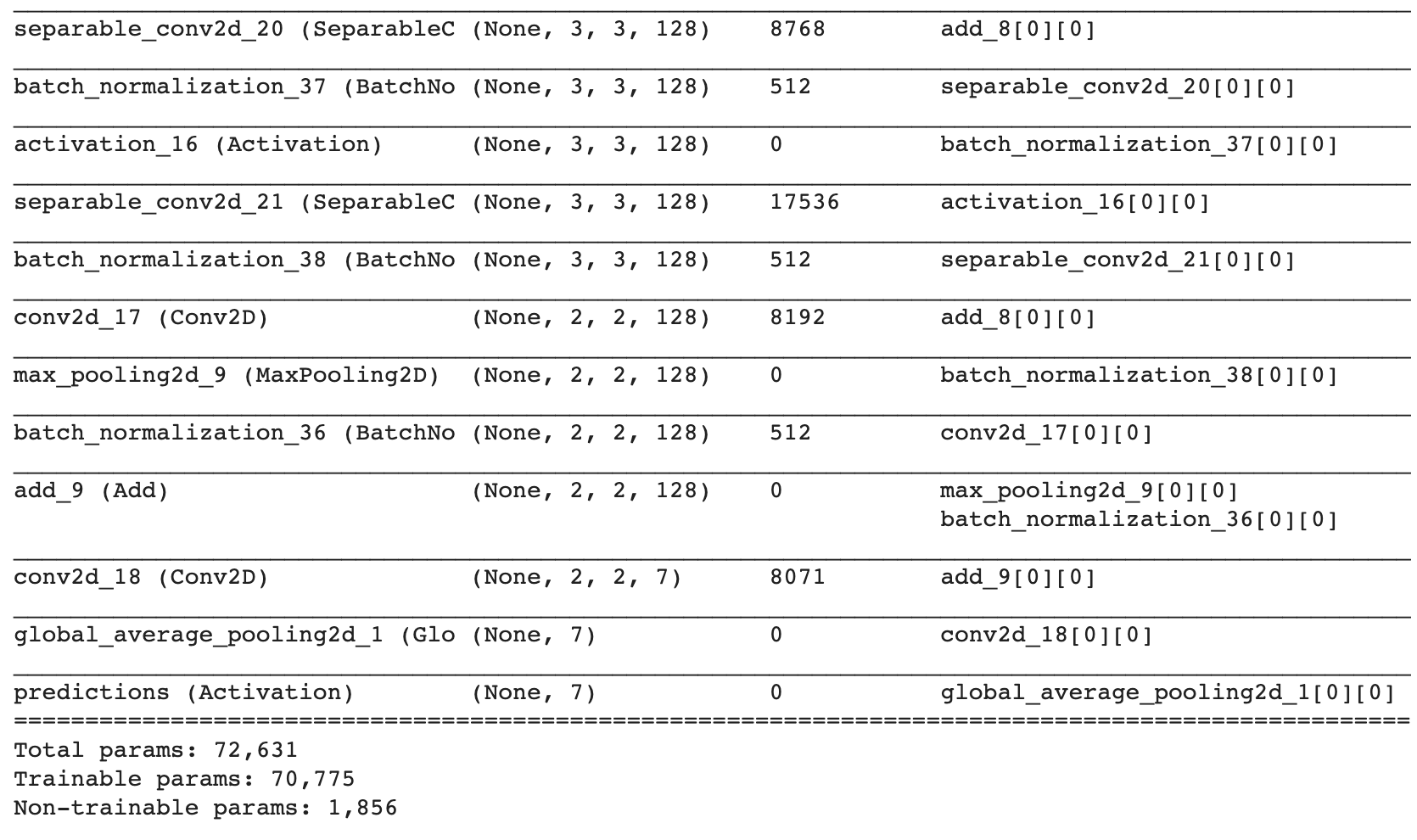

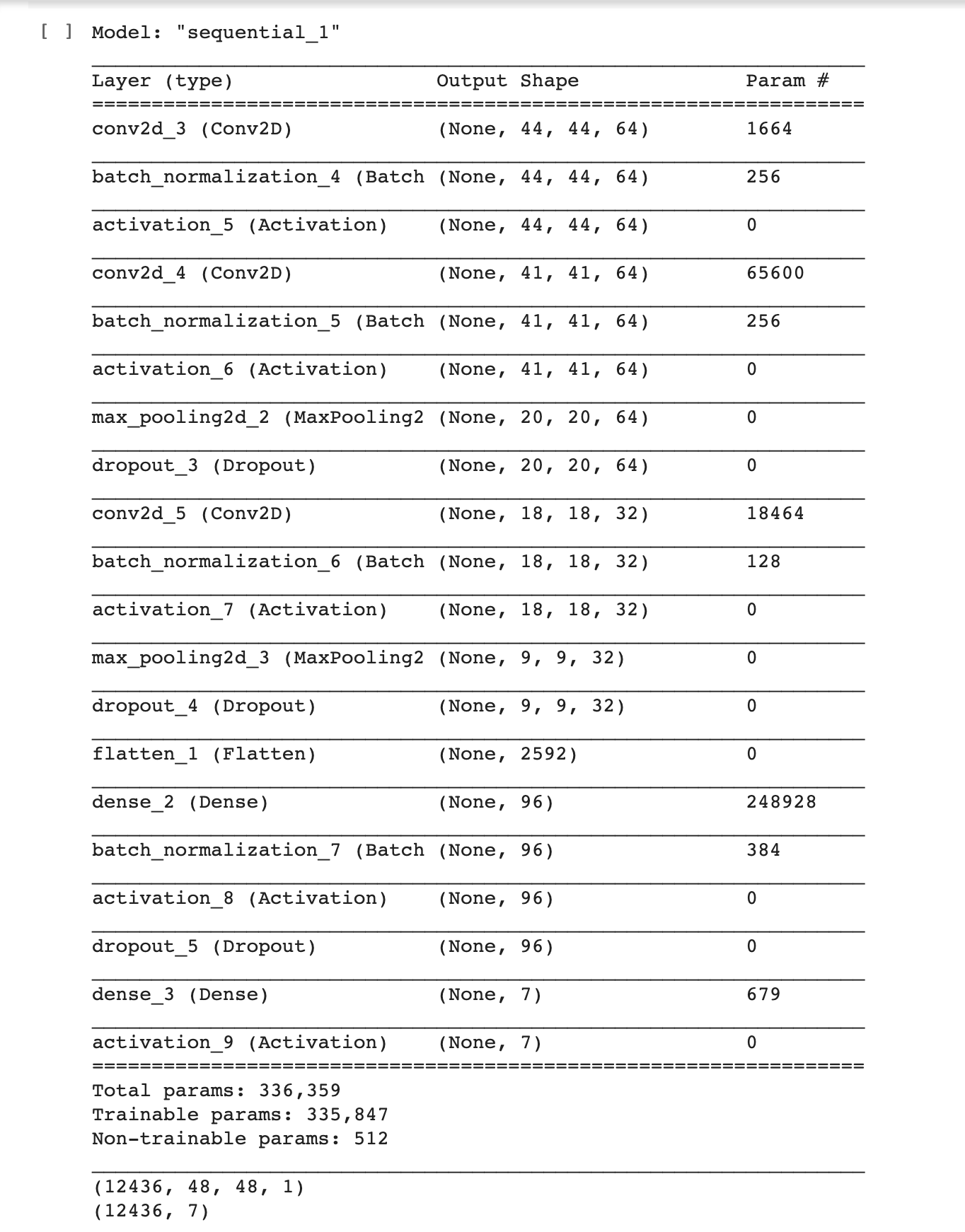

Sequential Model

- Designed a sequential convolutional neural network (CNN) for facial expression recognition.

- Trained and tested it on the FER2013 dataset with 7 classes: Angry, Disgust, Fear, Happy, Sad, Surprise and Neutral.

- Training accuracy: 84.22% after 40 epochs.

- Validation accuracy: 64.62% after 40 epochs.

- Highest per-emotion accuracy: 81.90% (Happy).

- Lowest per-emotion accuracy: 41.50% (Fear).

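The report does not define "individual emotion accuracy". A common reading is per-class accuracy (recall): the share of images of a given true emotion that the model labels correctly. The sketch below assumes that definition and integer labels 0 to 6 in the dataset's order; the function names are hypothetical, and the toy labels are not results from this project.

```python
# Hypothetical sketch: "individual emotion accuracy" is assumed to mean
# per-class recall, i.e. correct predictions / images of that true class.
EMOTIONS = ["Angry", "Disgust", "Fear", "Happy", "Sad", "Surprise", "Neutral"]


def per_class_accuracy(y_true, y_pred, num_classes=len(EMOTIONS)):
    """Return {emotion: accuracy} for every class that appears in y_true."""
    correct = [0] * num_classes
    total = [0] * num_classes
    for t, p in zip(y_true, y_pred):
        total[t] += 1
        if t == p:
            correct[t] += 1
    # Classes with no examples are skipped to avoid dividing by zero.
    return {
        EMOTIONS[c]: correct[c] / total[c]
        for c in range(num_classes)
        if total[c] > 0
    }


def best_and_worst(acc):
    """Return the (emotion, accuracy) pairs with the highest and lowest accuracy."""
    best = max(acc.items(), key=lambda kv: kv[1])
    worst = min(acc.items(), key=lambda kv: kv[1])
    return best, worst


if __name__ == "__main__":
    # Toy labels for illustration only.
    y_true = [3, 3, 3, 3, 2, 2, 2, 0, 0]
    y_pred = [3, 3, 3, 4, 2, 4, 6, 0, 2]
    acc = per_class_accuracy(y_true, y_pred)
    print(acc)
    print(best_and_worst(acc))
```

With the toy labels, Happy scores 0.75 and Fear about 0.33, so the best/worst pair mirrors the shape (not the values) of the results reported above.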

Model Architecture

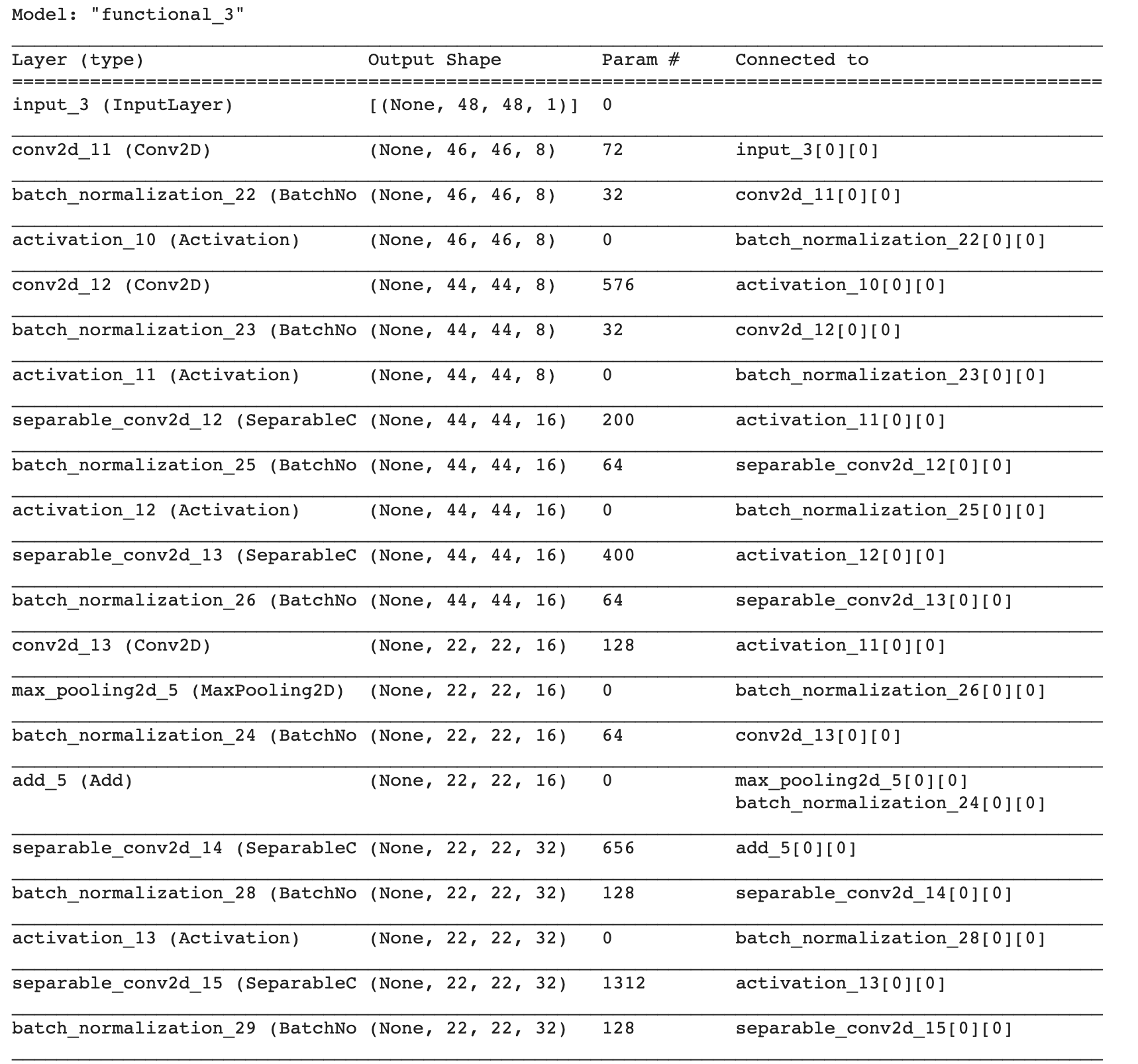

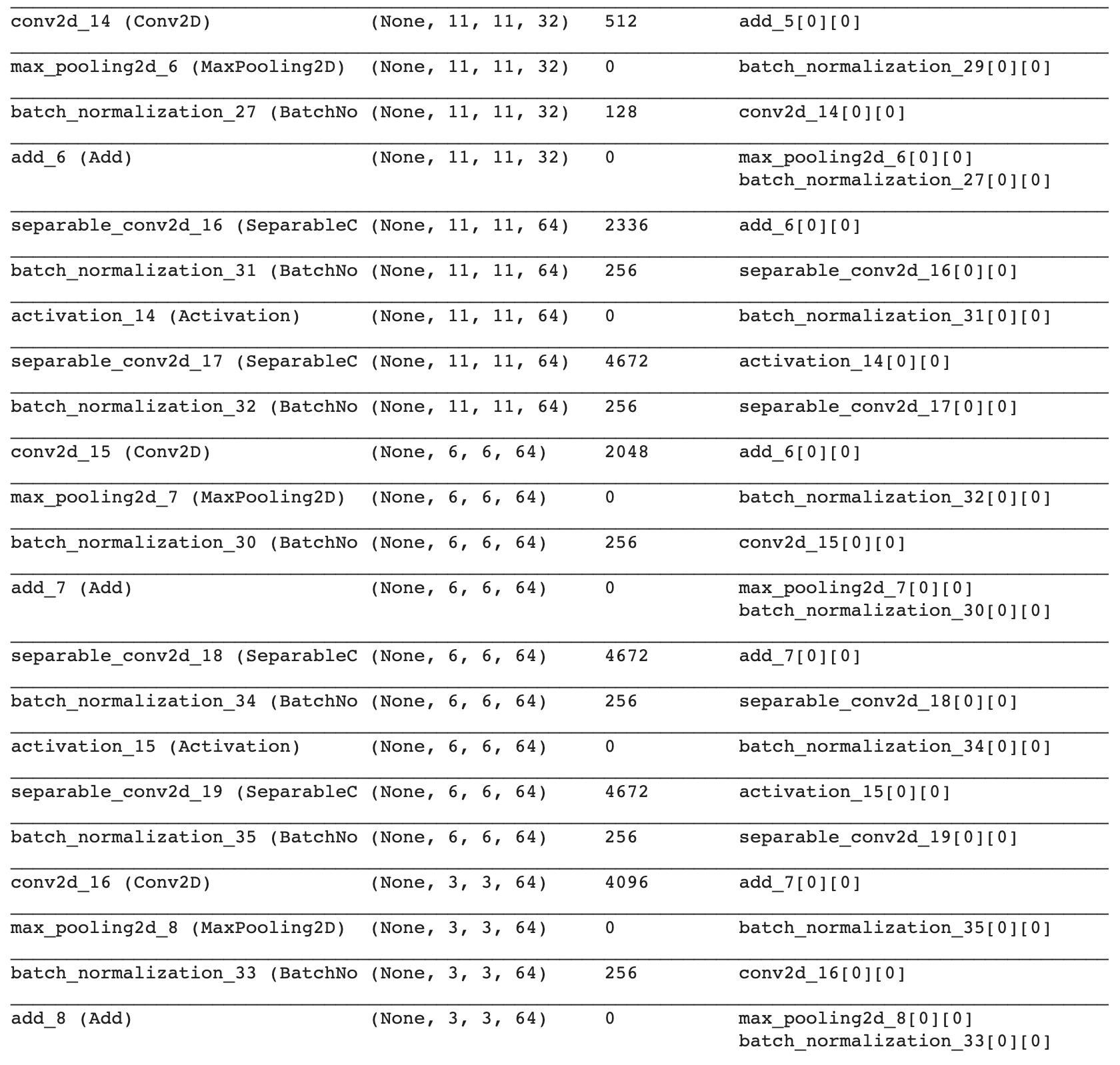

Depthwise Convolutional Model

- Designed a depthwise convolutional neural network for facial expression recognition.

- Trained and tested it on the FER2013 dataset with 7 classes: Angry, Disgust, Fear, Happy, Sad, Surprise and Neutral.

- Training accuracy: 67.75% after 100 epochs.

- Validation accuracy: 60.95% after 100 epochs.

- Highest per-emotion accuracy: 83.37% (Happy).

- Lowest per-emotion accuracy: 35.74% (Fear).

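A depthwise convolution applies one k x k filter to each input channel separately instead of mixing all channels in every filter. The report does not say whether its model follows this with a 1x1 pointwise convolution (the depthwise separable design popularized by MobileNet), so this minimal pure-Python sketch shows both steps as separate functions. It assumes stride 1, no padding and a channel-first nested-list layout, and, like deep learning frameworks, computes cross-correlation (the kernel is not flipped). The function names are illustrative, not taken from the project's source code.

```python
# Minimal sketch of depthwise and pointwise (1x1) convolution, stride 1, no padding.
# Assumed layout: image[channel][row][col], kernels[channel][row][col].


def depthwise_conv2d(image, kernels):
    """Convolve each channel with its own k x k kernel; channels are never mixed."""
    if len(image) != len(kernels):
        raise ValueError("need exactly one kernel per input channel")
    outputs = []
    for channel, kernel in zip(image, kernels):
        h, w = len(channel), len(channel[0])
        k = len(kernel)
        # Slide the kernel over every valid position (cross-correlation).
        out = [
            [
                sum(
                    channel[i + di][j + dj] * kernel[di][dj]
                    for di in range(k)
                    for dj in range(k)
                )
                for j in range(w - k + 1)
            ]
            for i in range(h - k + 1)
        ]
        outputs.append(out)
    return outputs


def pointwise_conv(channels, weights):
    """1x1 convolution that mixes channels; weights[out_channel][in_channel]."""
    h, w = len(channels[0]), len(channels[0][0])
    return [
        [
            [sum(wt[c] * channels[c][i][j] for c in range(len(channels))) for j in range(w)]
            for i in range(h)
        ]
        for wt in weights
    ]
```

Running the depthwise step first and the pointwise step second gives a depthwise separable layer; the depthwise step alone only filters spatially within each channel.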

Model Architecture

Although the sequential model reached a higher validation accuracy (64.62% versus 60.95%), we finalized the depthwise convolutional model for further testing after weighing accuracy against model size, since mobile CPU resources are limited.
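The size argument can be made concrete by counting weights. For one k x k layer mapping c_in input channels to c_out output channels, a standard convolution needs k*k*c_in*c_out weights, while a depthwise separable one needs k*k*c_in + c_in*c_out. The sketch compares the two for an example 3x3 layer going from 64 to 128 channels; these sizes are assumptions for illustration, not the project's actual layers, and biases are ignored.

```python
# Hypothetical illustration of why depthwise designs are smaller.
# Layer sizes are examples only; bias terms are ignored.


def standard_conv_params(k, c_in, c_out):
    # Every output channel has its own k x k x c_in kernel.
    return k * k * c_in * c_out


def depthwise_separable_params(k, c_in, c_out):
    # One k x k kernel per input channel (depthwise) plus a 1x1 mixing step (pointwise).
    return k * k * c_in + c_in * c_out


def float32_megabytes(n_params):
    # Approximate weight storage at 4 bytes per float32 parameter.
    return n_params * 4 / 1e6


if __name__ == "__main__":
    std = standard_conv_params(3, 64, 128)
    sep = depthwise_separable_params(3, 64, 128)
    print(std, sep, round(std / sep, 1))  # 73728 8768 8.4
    print(float32_megabytes(std), float32_megabytes(sep))
```

For this example layer the separable version needs roughly 8.4 times fewer weights; the real saving for the project's model depends on its actual layer sizes.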

Dataset

The data consists of 48x48-pixel grayscale images of faces. The faces have been automatically registered so that each face is more or less centered and occupies about the same amount of space in every image. The task is to classify each face, based on the emotion shown in the facial expression, into one of seven categories (0=Angry, 1=Disgust, 2=Fear, 3=Happy, 4=Sad, 5=Surprise, 6=Neutral).
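The description matches the public FER2013 release, which is commonly distributed as a CSV file with an emotion column, a pixels column holding 2,304 (48 x 48) space-separated grayscale values, and a Usage column. This sketch assumes that layout; if the project stored the data differently, only parse_row would need to change. It uses only the standard library and returns each sample as (label, 48 x 48 list of pixel rows, usage). The function names are hypothetical.

```python
import csv
import io

# Label indices in the order listed above.
EMOTIONS = ["Angry", "Disgust", "Fear", "Happy", "Sad", "Surprise", "Neutral"]
SIZE = 48  # images are 48x48 grayscale


def parse_row(row):
    """Turn one CSV row into (label_index, 48x48 list of pixel rows, usage)."""
    pixels = [int(v) for v in row["pixels"].split()]
    if len(pixels) != SIZE * SIZE:
        raise ValueError(f"expected {SIZE * SIZE} pixels, got {len(pixels)}")
    # Split the flat pixel list into SIZE rows of SIZE values each.
    image = [pixels[r * SIZE:(r + 1) * SIZE] for r in range(SIZE)]
    return int(row["emotion"]), image, row.get("Usage")


def load_fer_csv(text):
    """Parse the whole CSV (passed in as a string) into a list of samples."""
    return [parse_row(row) for row in csv.DictReader(io.StringIO(text))]
```

For a real file, the same DictReader can be given an open file handle instead of io.StringIO; EMOTIONS[label] maps a parsed label back to its name.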