Create a GAN-based framework to generate fake videos from a single input picture

Description:

The model takes a single frame as input and outputs a 16-frame video. An LSTM layer learns the temporal information; it is followed by a 3D generator that learns only the spatial features.
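The data flow described above can be sketched as a toy NumPy forward pass. This is a minimal sketch under stated assumptions, not the code in lstm_gan.py. All function names (`lstm_unroll`, `init_params`, `generate_video`) and layer sizes are hypothetical, and the weights are random and untrained. The input frame is encoded with one dense projection and concatenated with a noise vector. The spatial stage is stood in for by a single dense decoder applied to each frame independently, whereas the real 3D generator uses convolutions. The sketch only shows how an LSTM can carry the temporal information while the decoder handles each frame's pixels.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def lstm_unroll(z, n_frames, W, U, b):
    """Run one LSTM cell for n_frames steps, feeding the same input vector z at every step.

    W: (4H, D) input weights, U: (4H, H) recurrent weights, b: (4H,) bias.
    Returns the hidden states, shape (n_frames, H): one temporal code per frame.
    """
    H = U.shape[1]
    h, c = np.zeros(H), np.zeros(H)
    states = []
    for _ in range(n_frames):
        gates = W @ z + U @ h + b
        i = sigmoid(gates[:H])            # input gate
        f = sigmoid(gates[H:2 * H])       # forget gate
        o = sigmoid(gates[2 * H:3 * H])   # output gate
        g = np.tanh(gates[3 * H:])        # candidate cell update
        c = f * c + i * g
        h = o * np.tanh(c)
        states.append(h)
    return np.stack(states)


def init_params(rng, img_shape, code_dim=32, noise_dim=16, hidden_dim=64, scale=0.05):
    """Random, untrained weights for the toy model (all sizes are hypothetical)."""
    n_pix = int(np.prod(img_shape))
    d = code_dim + noise_dim
    return {
        "enc": rng.normal(0.0, scale, (code_dim, n_pix)),           # image encoder
        "W": rng.normal(0.0, scale, (4 * hidden_dim, d)),           # LSTM input weights
        "U": rng.normal(0.0, scale, (4 * hidden_dim, hidden_dim)),  # LSTM recurrent weights
        "b": np.zeros(4 * hidden_dim),
        "dec": rng.normal(0.0, scale, (n_pix, hidden_dim)),         # per-frame spatial decoder
    }


def generate_video(image, noise, params, n_frames=16):
    """Single image + noise -> LSTM over time -> per-frame spatial decoding."""
    height, width, channels = image.shape
    img_code = np.tanh(params["enc"] @ image.ravel())   # encode the input frame
    z = np.concatenate([img_code, noise])                # combine image code with noise
    hidden = lstm_unroll(z, n_frames, params["W"], params["U"], params["b"])
    # The decoder sees one hidden state at a time, so it only models spatial structure.
    frames = np.tanh(hidden @ params["dec"].T)           # (n_frames, H*W*C) in [-1, 1]
    return frames.reshape(n_frames, height, width, channels)


rng = np.random.default_rng(0)
img_shape = (128, 128, 3)
params = init_params(rng, img_shape)
image = rng.uniform(-1.0, 1.0, img_shape)
noise = rng.normal(size=16)
video = generate_video(image, noise, params)
print(video.shape)  # (16, 128, 128, 3)
```

Because the LSTM state changes at every step, each frame gets a different code even though the image and noise are fed in unchanged; in this toy, the decoder never sees more than one frame at a time.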

Dataset:

UCF101-Action Recognition dataset and MPII Cooking Activities dataset
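Both datasets provide full-length videos, so training examples have to be cut into fixed-length clips. The sketch below assumes each video is already decoded into a `(T, H, W, C)` uint8 array and resized to 128 x 128 (for example with `skvideo.io.vread` and `skimage.transform.resize`). It takes a random run of 16 consecutive frames and uses the clip's first frame as the single input image. Which frame the original code conditions on is not stated, so that choice, the helper names, and the [-1, 1] scaling (which assumes a tanh generator output) are all assumptions.

```python
import numpy as np


def sample_clip(video, n_frames=16, rng=None):
    """Cut a random run of n_frames consecutive frames from a (T, H, W, C) video array.

    Returns (input_frame, clip): the first frame of the clip (used as the single
    conditioning image, an assumption) and the full target clip.
    """
    rng = np.random.default_rng() if rng is None else rng
    total = video.shape[0]
    if total < n_frames:
        raise ValueError(f"video has {total} frames, need at least {n_frames}")
    start = int(rng.integers(0, total - n_frames + 1))   # upper bound is exclusive
    clip = video[start:start + n_frames]
    return clip[0], clip


def to_unit_range(frames_uint8):
    """Scale uint8 pixels in [0, 255] to float32 in [-1, 1] to match a tanh generator."""
    return frames_uint8.astype(np.float32) / 127.5 - 1.0


# Example with a random stand-in for a decoded, resized 40-frame video.
rng = np.random.default_rng(0)
video = rng.integers(0, 256, size=(40, 128, 128, 3), dtype=np.uint8)
input_frame, clip = sample_clip(video, rng=rng)
x_input, x_clip = to_unit_range(input_frame), to_unit_range(clip)
print(x_input.shape, x_clip.shape)  # (128, 128, 3) (16, 128, 128, 3)
```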

Dependencies:

1) numpy

2) keras

3) matplotlib

4) skimage

5) skvideo

LSTM GAN:

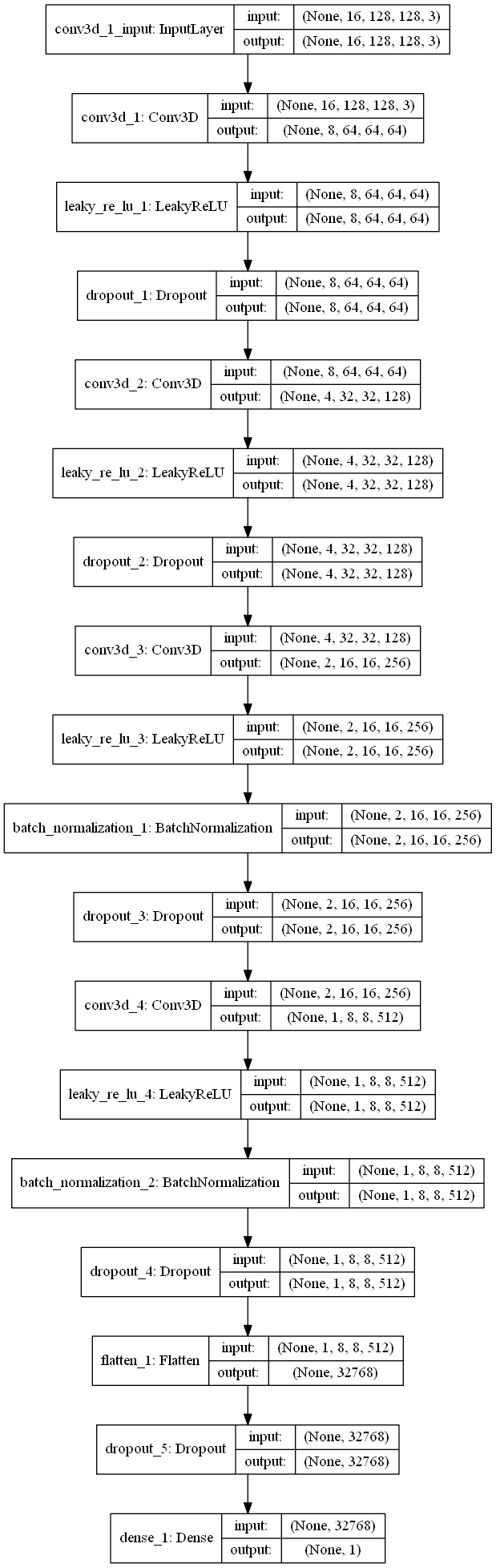

1) Discriminator.

Plot of the Discriminator Model:

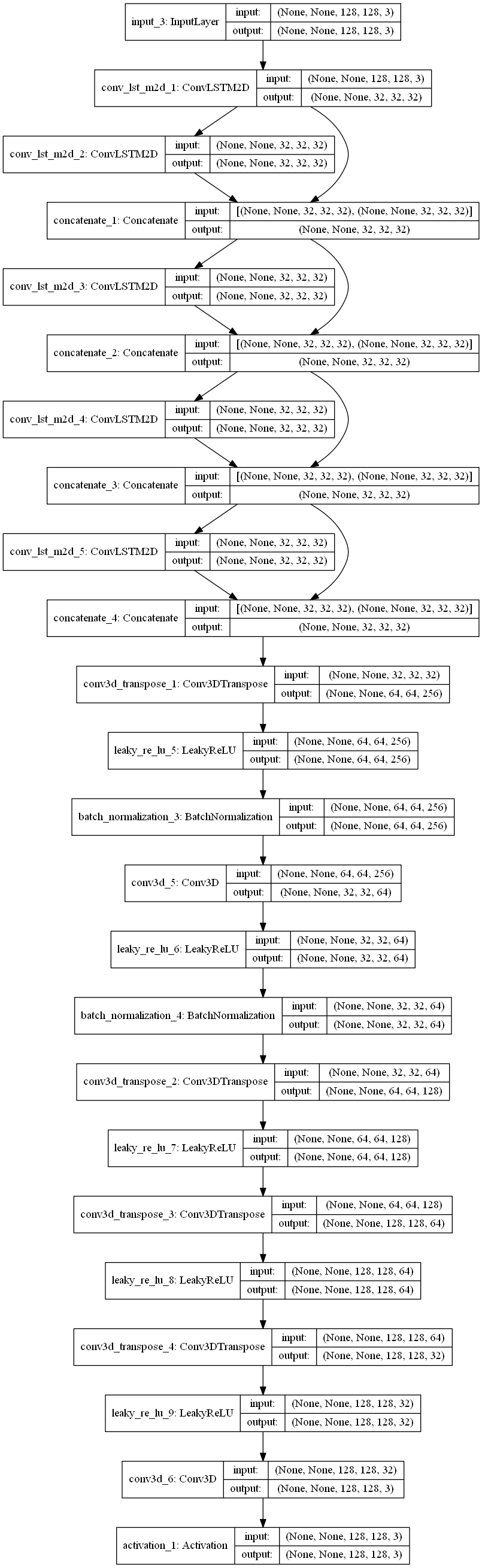

2) Generator: an LSTM followed by the 3D GAN generator.

Plot of the Generator Model:

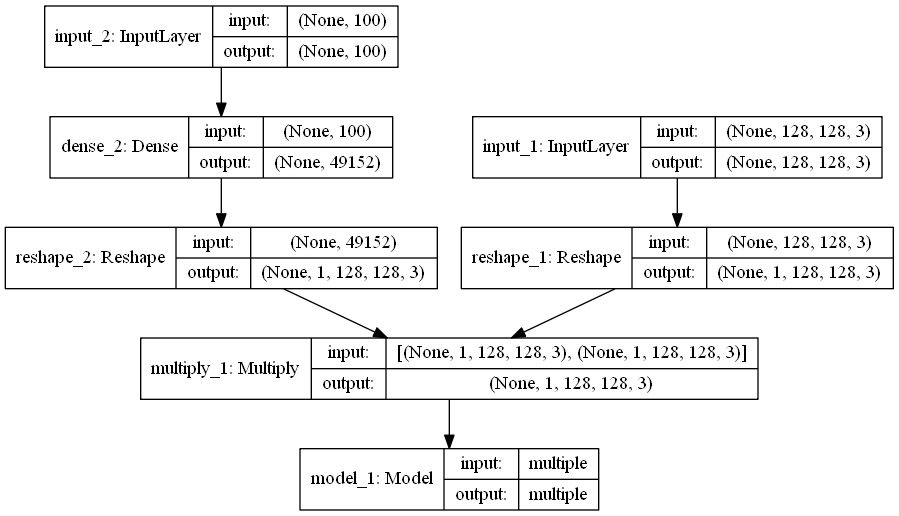

3) Combined generator for input data.

4) Dropout with a rate of 0.5.
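For reference, Keras's `Dropout` layer applies inverted dropout during training: each unit is zeroed with probability `rate`, and the surviving units are scaled by `1 / (1 - rate)` so the expected activation is unchanged; at inference the layer passes its input through. The NumPy sketch below uses the same scheme with the 0.5 rate used here. Which layers the dropout follows in lstm_gan.py is not stated, and the function name is hypothetical.

```python
import numpy as np


def dropout(x, rate=0.5, training=True, rng=None):
    """Inverted dropout, the same scheme as Keras's Dropout(rate).

    During training each unit is zeroed with probability `rate` and the survivors are
    multiplied by 1 / (1 - rate); at inference the input is returned unchanged.
    """
    if not training or rate == 0.0:
        return x
    rng = np.random.default_rng() if rng is None else rng
    keep = rng.random(x.shape) >= rate         # True with probability 1 - rate
    return np.where(keep, x / (1.0 - rate), 0.0)


rng = np.random.default_rng(0)
activations = np.ones(10_000)
dropped = dropout(activations, rate=0.5, rng=rng)
print(sorted(set(dropped.tolist())))  # [0.0, 2.0]
print(dropped.mean())  # roughly 1.0: the scaling preserves the expected activation
```

With a rate of 0.5, every surviving activation is doubled, which is why the mean stays near the undropped value.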

Download lstm_gan.py

Output:

A 16-frame video at 128 x 128 resolution.
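To save or view the output, the generated tensor has to be turned into 8-bit frames. Assuming the generator ends in a tanh activation (values in [-1, 1]), which the source does not state, a minimal conversion to a `(16, 128, 128, 3)` uint8 array could look like this; the function name is hypothetical.

```python
import numpy as np


def to_uint8_video(frames):
    """Map generator output in [-1, 1] (assumed tanh) to uint8 pixels in [0, 255].

    frames: float array of shape (n_frames, height, width, channels).
    """
    frames = np.clip(frames, -1.0, 1.0)            # guard against slight overshoot
    return np.round((frames + 1.0) * 127.5).astype(np.uint8)


# Example: a random stand-in for a 16-frame, 128 x 128 RGB prediction.
rng = np.random.default_rng(0)
fake_output = rng.uniform(-1.0, 1.0, size=(16, 128, 128, 3))
video = to_uint8_video(fake_output)
print(video.shape, video.dtype)  # (16, 128, 128, 3) uint8
```

The resulting array can then be written with scikit-video, for example `skvideo.io.vwrite("pred.mp4", video)`; this needs an FFmpeg backend installed, and the file name is only illustrative.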

Download the video predicted by the 3D GAN with a single image as input

Download the video predicted by the LSTM GAN

Conclusion:

1) Noise is combined with one input image to generate the video.

2) Training becomes unstable when the model is too complex.

3) The 2D + 1D approach gives better results than a pure 3D video GAN.