More Index Construction

CS267

Chris Pollett

Oct. 10, 2012


Exercise 4.1. Suppose the posting list for some term consists of 64 million 4-byte postings. To carry out a random access on this posting list, the search engine performs two disk read operations: (1) it loads the per-term index into RAM; (2) it loads into RAM a block B of postings, identified by a binary search over the synchronization points. Let the granularity (Gran) be the number of postings per sync point. What is the optimal granularity in this situation? What is the total number of bytes read from disk?

Let `NumSync` be the total number of sync points, so `NumSync = (64 times 10^6)/(Gran)`.

Let `BIO` be the number of bytes per I/O, and let `TIO` be the total number of I/Os.

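Reading the synchronization points costs `(4 times NumSync)/(BIO)` I/Os and reading block `B` costs `(4 times Gran)/(BIO)` I/Os (the model charges 4 bytes per sync point as well as per posting), so `TIO = (4 times NumSync)/(BIO) + (4 times Gran) /(BIO) = (256 times 10^6)/(Gran times BIO) + (4 times Gran) /(BIO)`.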
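A quick numeric check of this cost model, as a minimal Python sketch. The constants come from the exercise except `BLOCK_BYTES = 4096`, which assumes 4 KB I/Os, and `SYNC_BYTES = 4`, which is the per-sync-point size implied by the `4 times NumSync` term; all names are hypothetical. `tio_continuous` evaluates `TIO` exactly as written, while `tio_blocks` rounds each of the two reads up to whole blocks.

```python
import math

NUM_POSTINGS = 64 * 10**6   # postings in the list (from the exercise)
POSTING_BYTES = 4           # bytes per posting (from the exercise)
SYNC_BYTES = 4              # bytes per sync point, as implied by the 4 x NumSync term
BLOCK_BYTES = 4096          # assumed bytes per I/O (BIO = 4 KB)


def tio_continuous(gran: float) -> float:
    """TIO exactly as in the formula: fractional I/Os, no rounding."""
    num_sync = NUM_POSTINGS / gran
    return (SYNC_BYTES * num_sync) / BLOCK_BYTES + (POSTING_BYTES * gran) / BLOCK_BYTES


def tio_blocks(gran: int) -> int:
    """Same cost, but each of the two reads is rounded up to whole blocks."""
    num_sync = math.ceil(NUM_POSTINGS / gran)
    sync_ios = math.ceil(SYNC_BYTES * num_sync / BLOCK_BYTES)
    block_ios = math.ceil(POSTING_BYTES * gran / BLOCK_BYTES)
    return sync_ios + block_ios


if __name__ == "__main__":
    # Print the trade-off for a few granularities around the optimum.
    for gran in (1000, 4000, 8000, 16000, 64000):
        print(gran, round(tio_continuous(gran), 2), tio_blocks(gran))
```

Among the printed granularities, both functions are smallest at `Gran = 8000` (about 15.6 fractional I/Os, or 16 whole-block I/Os); halving or doubling the granularity raises the cost to roughly 20 I/Os, since the two terms trade off symmetrically.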

To minimize `TIO`, we differentiate with respect to `Gran` and set the derivative to zero:

`frac(d TIO)(d Gran) = frac(d)(d Gran)((256 times 10^6)/(Gran times BIO)) + frac(d)(d Gran)((4 times Gran) /(BIO))`

`= -((256 times 10^6)/(Gran^2 times BIO)) + 4/(BIO) = 0`

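Solving this gives `Gran^2 = 64 times 10^6`, so `Gran = 8000` postings; this is a minimum, since the second derivative `(512 times 10^6)/(Gran^3 times BIO)` is positive. Then `NumSync = (64 times 10^6)/8000 = 8000` synchronization points. Assuming 4 KB blocks, block `B` holds `8000 times 4 = 32000` bytes, so reading it takes `8` I/Os, and reading all the synchronization records (also `32000` bytes) takes another `8` I/Os, for a total of `16` I/Os. The total amount read from disk is therefore `64000` bytes, or `16 times 4096 = 65536` bytes if whole blocks are counted.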
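The two-step random access that the exercise assumes can be sketched in memory as follows. This is an illustration, not the book's implementation: the posting list is a sorted Python list standing in for the on-disk list, each synchronization point is assumed to be the first posting of its block, and the function names are hypothetical. Step (1) corresponds to having `sync` in RAM; step (2) is the slice that stands in for reading block `B`.

```python
from bisect import bisect_left, bisect_right
from typing import List, Optional


def build_sync_points(postings: List[int], gran: int) -> List[int]:
    """One sync point per block: the first posting of every run of
    `gran` postings (this list plays the role of the per-term index)."""
    return [postings[i] for i in range(0, len(postings), gran)]


def first_posting_at_least(postings: List[int], sync: List[int],
                           gran: int, target: int) -> Optional[int]:
    """Return the smallest posting >= target, or None if there is none."""
    # Step 1: binary search the sync points for the last block whose
    # first posting is <= target (block 0 if target precedes everything).
    b = max(bisect_right(sync, target) - 1, 0)
    # Step 2: "load" only block B and binary search inside it.
    block = postings[b * gran:(b + 1) * gran]
    i = bisect_left(block, target)
    if i < len(block):
        return block[i]
    # Every posting in block B is < target, so the answer (if any) is the
    # first posting of the next block, which is its sync point.
    nxt = b + 1
    return sync[nxt] if nxt < len(sync) else None
```

With the exercise's numbers (`Gran = 8000`), `sync` holds 8000 entries and each lookup touches a single 8000-posting block, which is where the two 32,000-byte reads come from. When the target lies past the end of block `B`, the sketch answers from the next sync point instead of reading a second block.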

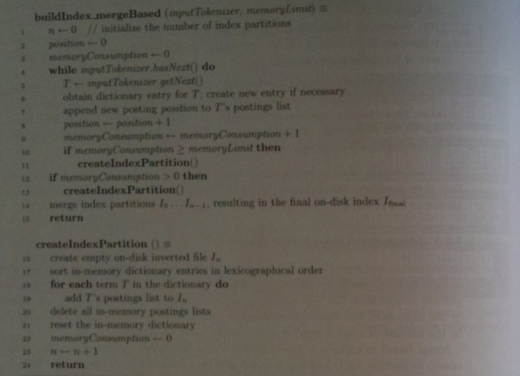

```
buildIndex_sortBased(inputTokenizer)
{
    position := 0;
    while (inputTokenizer.hasNext()) {
        T := inputTokenizer.getNext();
        obtain dictionary entry for T, create new entry if necessary;
        termID := unique termID of T;
        write record R[position] := (termID, position) to disk;
        position++;
    }
    tokenCount := position;
    sort R[0], ..., R[tokenCount-1] by first component; break ties with second component;
    perform a sequential scan of R[0], ..., R[tokenCount-1], creating the final index;
    return;
}
```
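A runnable Python sketch of the same algorithm, under simplifying assumptions: a token list stands in for `inputTokenizer`, the records `R` are held in memory rather than written to disk, and the final index is a dictionary mapping each term to its sorted list of positions. `build_index_sort_based` is a hypothetical name.

```python
from typing import Dict, Iterable, List, Tuple


def build_index_sort_based(tokens: Iterable[str]) -> Dict[str, List[int]]:
    """In-memory sketch of buildIndex_sortBased (R kept in RAM, not on disk)."""
    dictionary: Dict[str, int] = {}        # term -> termID
    records: List[Tuple[int, int]] = []    # R: (termID, position) records

    for position, term in enumerate(tokens):
        # Obtain the dictionary entry for the term, creating it if necessary;
        # new terms get the next unused termID.
        term_id = dictionary.setdefault(term, len(dictionary))
        records.append((term_id, position))

    # Sort by termID, breaking ties by position (tuple comparison does both).
    records.sort()

    # Sequential scan: consecutive records with the same termID form that
    # term's posting list, already in increasing position order.
    id_to_term = {tid: term for term, tid in dictionary.items()}
    index: Dict[str, List[int]] = {}
    for term_id, position in records:
        index.setdefault(id_to_term[term_id], []).append(position)
    return index
```

For example, `build_index_sort_based("to be or not to be".split())` returns `{'to': [0, 4], 'be': [1, 5], 'or': [2], 'not': [3]}`. Sorting `(termID, position)` tuples handles the primary key and the tie-break in one call because Python compares tuples lexicographically. A real implementation, as the pseudocode indicates, writes `R` to disk; if `R` does not fit in RAM, the sort step then has to be an external (on-disk) sort.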