Map Reduce and Page Rank

CS267

Chris Pollett

Dec 5, 2012

Let epsilon be the constant we use to decide when to stop.

Compute initial current_r.

do {

Store in distributed file system (DFS) current_r as old_r

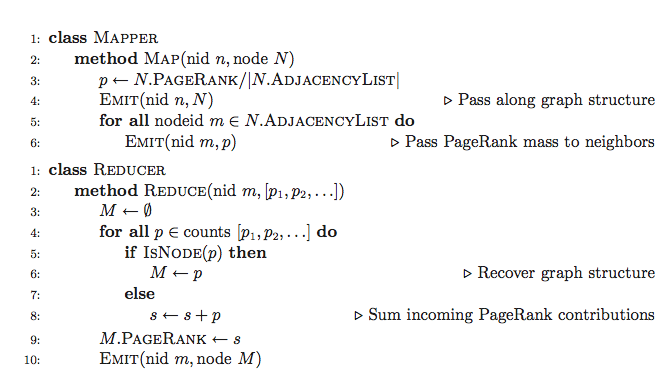

Do map reduce job to compute A*current_r

where A is the normalized adjacency matrix

Store result in DFS as current_r.

Do map reduce job to compute dangling node correction to current_r

Store result in DFS as current_r.

Do map reduce job to compute teleporter correction to current_r

Store result in DFS as current_r.

Do map reduce job to compute len = || current_r - old_r||

} while(len > epsilon)

output current_r as page ranks
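The loop above can be sketched in Python as a single-machine simulation. This is only an illustration under several assumptions that the slides do not fix: `run_map_reduce` is a hypothetical in-memory stand-in for a real MapReduce job, the damping factor `alpha = 0.85` is a conventional choice, the rank of dangling nodes is redistributed uniformly, and the length is taken to be the L1 norm. Only the first job goes through the helper; the two corrections and the norm are written as plain Python for brevity, although each would be its own job on a cluster.

```python
from collections import defaultdict


def run_map_reduce(records, mapper, reducer):
    """Hypothetical in-memory stand-in for one job: map, group by key, reduce."""
    groups = defaultdict(list)
    for key, value in records:
        for out_key, out_value in mapper(key, value):
            groups[out_key].append(out_value)
    return {key: reducer(key, values) for key, values in groups.items()}


def page_rank(links, alpha=0.85, epsilon=1e-8):
    """links maps every node to the list of nodes it links to.

    alpha and the default epsilon are assumed values, not taken from the slides.
    """
    nodes = list(links)
    n = len(nodes)
    current_r = {node: 1.0 / n for node in nodes}

    def spread(node, rank):
        # Emit 0 for the node itself so it appears in the output even
        # when nothing links to it.
        yield node, 0.0
        for target in links[node]:
            yield target, rank / len(links[node])

    def add_up(_key, values):
        return sum(values)

    while True:  # Python has no do-while, so we break at the bottom
        old_r = dict(current_r)

        # Job 1: A * current_r, with A the normalized adjacency matrix.
        current_r = run_map_reduce(old_r.items(), spread, add_up)

        # Job 2: dangling node correction (assumed uniform redistribution).
        dangling = sum(old_r[node] for node in nodes if not links[node])
        current_r = {node: r + dangling / n for node, r in current_r.items()}

        # Job 3: teleporter correction with the assumed damping factor.
        current_r = {node: alpha * r + (1 - alpha) / n
                     for node, r in current_r.items()}

        # Job 4: len = || current_r - old_r ||, here in the L1 norm.
        length = sum(abs(current_r[node] - old_r[node]) for node in nodes)
        if length <= epsilon:
            break

    return current_r
```

For example, `page_rank({"a": ["b", "c"], "b": ["c"], "c": ["a"], "d": []})` returns ranks that sum to 1, with the dangling node `d` contributing its rank evenly to all nodes on each pass.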

Exercise 14.6. (a) Design a MapReduce that computes the average document length in a corpus of text.

Answer. The average document length is the total number of tokens divided by the total number of documents. Let our initial key-value pairs be (docid, document). Our mapper will produce key-value pairs of the form ("doc_count", 1) and ("token_count", 1) as follows (we assume, or arrange, that both keys go to the same reducer):

    map(docid, document) {
        output("doc_count", 1);
        for each token t in document {
            output("token_count", 1);
        }
        return;
    }

The reducer then adds these pairs as follows:

    reduce(key, (v[1],... v[n])) { // remark v[i] will always be 1
        static total_docs = 0;
        static total_tokens = 0;
        for i = 1 to n {
            if(key == "doc_count") {
                total_docs += v[i];
            }
            if(key == "token_count") {
                total_tokens += v[i];
            }
        }
        if(total_docs > 0) {
            return total_tokens/total_docs;
        }
        return -1;
    }

We assume that if we see -1, we should wait for the second return value of the function.
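The mapper and reducer from part (a) can be tried out with a small Python simulation. This is a sketch, not a cluster implementation: the class `AverageLengthReducer` and the driver `average_document_length` are hypothetical names, the static variables are modeled as instance fields, tokens are assumed to be whitespace-separated, and the shuffle phase is replaced by an in-memory dictionary.

```python
from collections import defaultdict


def map_doc(docid, document):
    """Mapper: one ("doc_count", 1) per document, one ("token_count", 1) per token."""
    yield "doc_count", 1
    for _token in document.split():  # assumption: whitespace tokenization
        yield "token_count", 1


class AverageLengthReducer:
    """Reducer whose fields play the role of the static variables."""

    def __init__(self):
        self.total_docs = 0
        self.total_tokens = 0

    def reduce(self, key, values):
        if key == "doc_count":
            self.total_docs += sum(values)
        elif key == "token_count":
            self.total_tokens += sum(values)
        if self.total_docs > 0:
            return self.total_tokens / self.total_docs
        return -1


def average_document_length(corpus):
    """Hypothetical driver: corpus maps docid to document text."""
    grouped = defaultdict(list)
    for docid, document in corpus.items():
        for key, value in map_doc(docid, document):
            grouped[key].append(value)

    reducer = AverageLengthReducer()  # both keys go to the same reducer
    result = -1
    for key in sorted(grouped):  # "doc_count" is handled before "token_count"
        result = reducer.reduce(key, grouped[key])
    return result  # the value returned by the last reduce call
```

Note that the intermediate return value depends on which key arrives first. In this sketch "doc_count" is processed first, so the first call returns 0.0 rather than -1, which is why the driver keeps only the value from the final call.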

(b) Revise your reduce function so that it can be used as a combiner.

Answer. To make the reduce function usable as a combiner, it should output the pairs ("doc_count", total_docs) and ("token_count", total_tokens) in addition to the average. We assume that the pairs output by the combiner are sent to the reducer, and that the return value is "local" to the machine.
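A minimal Python sketch of the combiner idea follows. It assumes that each "machine" holds one split of the corpus, that tokens are whitespace-separated, and that the final reducer simply sums the partial totals it receives. The function names `combine` and `average_with_combiner` are hypothetical.

```python
from collections import defaultdict


def combine(key, values):
    """Combiner: collapse one machine's values for a key into a partial total."""
    return key, sum(values)


def average_with_combiner(splits):
    """splits is a list of dicts (docid -> text), one per simulated machine."""
    partials = defaultdict(list)
    for split in splits:
        # Map phase on this machine.
        local = defaultdict(list)
        for _docid, document in split.items():
            local["doc_count"].append(1)
            local["token_count"].extend(1 for _ in document.split())
        # Combine locally, so only one pair per key leaves the machine.
        for key, values in local.items():
            out_key, partial = combine(key, values)
            partials[out_key].append(partial)

    # Final reduce: the values are now partial totals, not just 1s.
    total_docs = sum(partials.get("doc_count", []))
    total_tokens = sum(partials.get("token_count", []))
    if total_docs > 0:
        return total_tokens / total_docs
    return -1
```

Summing partial totals works because addition is associative and commutative. Averaging the per-machine averages would not give the right answer in general, which is why the combiner passes the two totals along rather than its local average.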