Map Reduce, Page Rank, Hadoop

CS267

Chris Pollett

Dec 5, 2018

Let epsilon be the constant we use to decide when to stop.

Compute initial list of node objects, each with a page_rank field and an adjacency list. This whole list we'll call current_r, and we slightly abuse notation to view it as a vector to which our matrices are applied.

do {

Store in the distributed file system (DFS) the pairs (nid, node) as (old_nid, old_node), where node is a node object (containing info about a web page). The page_rank values of these stored nodes make up old_r.

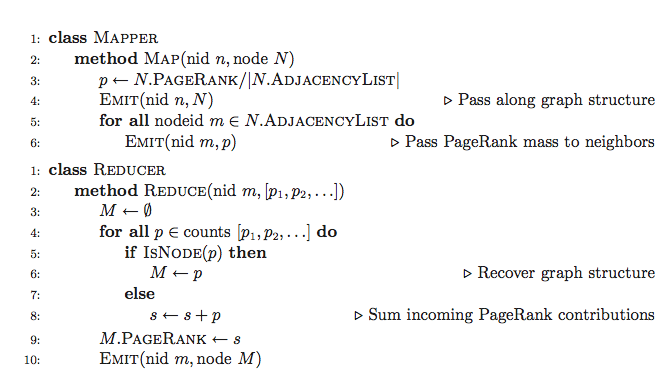

Do map reduce job to compute A*current_r, where A is the normalized adjacency matrix.

Store result in DFS as pairs (nid, node), where node has its page_rank field set to the value given by the above operation.

Do map reduce job to compute dangling node correction to current_r.

Store result in DFS as pairs (nid, node), where node has its page_rank field set to the value given by the above operation.

Do map reduce job to compute teleporter correction to current_r.

Store result in DFS as pairs (nid, node), where node has its page_rank field set to the value given by the above operation.

Send all pairs (nid, node) in DFS computed above to a reduce job which, grouping by nid, computes pairs (nid, node) in which node has a page_rank equal to the sum of the three page_ranks for that nid.

Store result in DFS as pairs (nid, node).

Do map reduce job to compute len = ||current_r - old_r||

} while(len > epsilon)

output nodes with their page ranks
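The loop above can be sketched on a single machine as follows. This is only an illustrative in-memory sketch, not the distributed jobs themselves: the class name PageRankSketch, the method names, the 3-page example web, and the choice of the L1 norm for len are all assumptions made for the example. It also assumes the three summands are weighted as (1 - alpha), (1 - alpha), and alpha, and that the dangling node and teleporter corrections spread rank uniformly over all pages.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

public class PageRankSketch {

    // One round: (1 - alpha)*A*r + (1 - alpha)*D*r + alpha*H*r.
    // links.get(j) holds the 0-based ids of the pages that page j links to.
    static double[] round(List<List<Integer>> links, double[] r, double alpha) {
        int n = r.length;
        double[] next = new double[n];
        double danglingMass = 0.0; // rank held by pages with no out-links
        double totalMass = 0.0;    // sum of all entries of r
        for (int j = 0; j < n; j++) {
            totalMass += r[j];
            List<Integer> out = links.get(j);
            if (out.isEmpty()) {
                danglingMass += r[j];
                continue;
            }
            // "Map": page j sends r[j]/outdegree to each page it links to.
            // "Reduce": contributions are summed per target page id.
            for (int i : out) {
                next[i] += (1 - alpha) * r[j] / out.size();
            }
        }
        // Dangling and teleporter corrections are the same for every page
        // (assumed uniform), so they are computed once and added to each entry.
        double correction = (1 - alpha) * danglingMass / n + alpha * totalMass / n;
        for (int i = 0; i < n; i++) {
            next[i] += correction;
        }
        return next;
    }

    // L1 distance between two rank vectors (the norm choice is an assumption).
    static double l1Distance(double[] a, double[] b) {
        double sum = 0.0;
        for (int i = 0; i < a.length; i++) {
            sum += Math.abs(a[i] - b[i]);
        }
        return sum;
    }

    // Repeats rounds until successive rank vectors differ by at most epsilon.
    static double[] pageRank(List<List<Integer>> links, double alpha, double epsilon) {
        int n = links.size();
        double[] current = new double[n];
        Arrays.fill(current, 1.0 / n);
        double len;
        do {
            double[] old = current;
            current = round(links, old, alpha);
            len = l1Distance(current, old);
        } while (len > epsilon);
        return current;
    }

    public static void main(String[] args) {
        // Hypothetical 3-page web: 0 -> 1, 0 -> 2, 1 -> 2, page 2 is dangling.
        List<List<Integer>> links = new ArrayList<>();
        links.add(Arrays.asList(1, 2));
        links.add(Arrays.asList(2));
        links.add(new ArrayList<>());
        double[] ranks = pageRank(links, 0.2, 1e-10);
        for (int i = 0; i < ranks.length; i++) {
            System.out.println(i + "\t" + ranks[i]);
        }
    }
}
```

In this sketch the A summand costs work proportional to the number of links, while the other two summands reduce to one sum each plus a uniform addition, which is why they are cheap to distribute.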

Suppose the web consists of four web pages, 1, 2, 3, and 4. Pages 1 and 2 link to each other, Page 2 also links to Page 3, and Page 4 is by itself. There are no other links. Write down the normalized adjacency matrix A. Write down the dangling node correction matrix D. Write down the teleporter matrix H.

Start with the vector `\vec r = (1/4, 1/4, 1/4, 1/4)^T`. Then one round of the page rank algorithm corresponds to computing:

`((1 - \alpha)(A+D)+ \alpha H)\cdot \vec r = (1-\alpha) A\vec r + (1 - \alpha)D\vec r + \alpha H\vec r`.

Let `\alpha = 0.2`. Compute the three summands of the right-hand side above separately, showing work. For each summand, observe how hard it is to compute an entry (as a function of the dimension of `\vec r`). When done, add the summands. Post your work to the Dec 5 Discussion Thread.
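As a hedged aid for checking such a computation, the entries of the three products can be written out in general. The conventions here are assumptions, not taken from the slides above: `N` is the dimension of `\vec r`; column `j` of `A` holds `1/\text{outdeg}(j)` in the rows of the pages that page `j` links to; column `j` of `D` is `1/N` in every row when page `j` has no out-links and zero otherwise; and every entry of `H` is `1/N`. Under these assumptions:

`(A\vec r)_i = \sum_{j \to i} \frac{r_j}{\text{outdeg}(j)}, \quad (D\vec r)_i = \frac{1}{N}\sum_{j \text{ dangling}} r_j, \quad (H\vec r)_i = \frac{1}{N}\sum_{j=1}^{N} r_j`.

The last two sums do not depend on `i`, so under these conventions each needs to be computed only once per round.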

I used

brew install hadoop

to install Hadoop on my laptop.

hadoop some-command args

For example, if some-command is jar, then it runs the map-reduce job given by the arguments.

    import java.io.IOException;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.*;
    import org.apache.hadoop.io.*;
    import org.apache.hadoop.mapreduce.*;
    import org.apache.hadoop.mapreduce.lib.input.TextInputFormat;
    import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

    public class WordCount
    {
        /* This inner class is used for our Map job.
           First two params to Mapper are types of KeyIn, ValueIn;
           last two are types of KeyOut, ValueOut.
        */
        public static class WCMapper extends Mapper<Object,Text,Text,IntWritable>
        {
            @Override
            public void map(Object key, Text value, Context context)
                throws IOException, InterruptedException
            {
                // normalize document case, get rid of non word chars
                String document = value.toString().toLowerCase()
                    .replaceAll("[^a-z\\s]", "");
                // Note: split(" ") can yield empty strings (for example, on a
                // blank line); these are emitted and counted like any other word.
                String[] words = document.split(" ");
                for (String word : words) {
                    // emit the pair (word, 1)
                    Text textWord = new Text(word);
                    IntWritable one = new IntWritable(1);
                    context.write(textWord, one);
                }
            }
        }

        /* This inner class is used for our Reducer job.
           First two params to Reducer are types of KeyIn, ValueIn;
           last two are types of KeyOut, ValueOut.
        */
        public static class WCReducer extends Reducer<Text, IntWritable,
            Text, IntWritable>
        {
            @Override
            public void reduce(Text key, Iterable<IntWritable> values,
                Context context) throws IOException, InterruptedException
            {
                // add up the 1's emitted by the mappers for this word
                int sum = 0;
                IntWritable result = new IntWritable();
                for (IntWritable val: values) {
                    sum += val.get();
                }
                result.set(sum);
                context.write(key, result);
            }
        }

        public static void main (String[] args) throws Exception
        {
            Configuration conf = new Configuration();
            Job job = Job.getInstance(conf, "wordcount");
            job.setJarByClass(WordCount.class);
            job.setMapperClass(WCMapper.class);
            job.setReducerClass(WCReducer.class);
            job.setOutputKeyClass(Text.class);
            job.setOutputValueClass(IntWritable.class);
            // args[0] is the input path, args[1] is the output directory
            TextInputFormat.addInputPath(job, new Path(args[0]));
            Path outputDir = new Path(args[1]);
            FileOutputFormat.setOutputPath(job, outputDir);
            // delete any output left by a previous run; the job will not
            // write into an output directory that already exists
            FileSystem fs = FileSystem.get(conf);
            fs.delete(outputDir, true);
            System.exit(job.waitForCompletion(true) ? 0 : 1);
        }
    }
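To see what the map and reduce steps of this job do without a Hadoop installation, the following plain-Java sketch imitates them in memory. It is not Hadoop code: the class WordCountSketch, its method names, and the two sample input lines are hypothetical, and the explicit shuffle method only stands in for the grouping and sorting by key that the framework performs between the two phases.

```java
import java.util.AbstractMap;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.SortedMap;
import java.util.TreeMap;

public class WordCountSketch {

    // Map step: same normalization as WCMapper, one (word, 1) pair per word.
    static List<Map.Entry<String, Integer>> map(String line) {
        String document = line.toLowerCase().replaceAll("[^a-z\\s]", "");
        List<Map.Entry<String, Integer>> pairs = new ArrayList<>();
        for (String word : document.split(" ")) {
            pairs.add(new AbstractMap.SimpleEntry<>(word, 1));
        }
        return pairs;
    }

    // Shuffle step: group the emitted values by key, with keys kept sorted.
    static SortedMap<String, List<Integer>> shuffle(List<Map.Entry<String, Integer>> pairs) {
        SortedMap<String, List<Integer>> grouped = new TreeMap<>();
        for (Map.Entry<String, Integer> pair : pairs) {
            grouped.computeIfAbsent(pair.getKey(), k -> new ArrayList<>())
                   .add(pair.getValue());
        }
        return grouped;
    }

    // Reduce step: same summation as WCReducer.
    static int reduce(String key, Iterable<Integer> values) {
        int sum = 0;
        for (int val : values) {
            sum += val;
        }
        return sum;
    }

    // Runs map on every line, then shuffle, then reduce on every key.
    static SortedMap<String, Integer> wordCount(List<String> lines) {
        List<Map.Entry<String, Integer>> pairs = new ArrayList<>();
        for (String line : lines) {
            pairs.addAll(map(line));
        }
        SortedMap<String, Integer> counts = new TreeMap<>();
        for (Map.Entry<String, List<Integer>> group : shuffle(pairs).entrySet()) {
            counts.put(group.getKey(), reduce(group.getKey(), group.getValue()));
        }
        return counts;
    }

    public static void main(String[] args) {
        // Hypothetical input lines, chosen only for illustration.
        List<String> lines = Arrays.asList("Foo la, la!", "foo LA");
        for (Map.Entry<String, Integer> e : wordCount(lines).entrySet()) {
            System.out.println(e.getKey() + "\t" + e.getValue());
        }
    }
}
```

For the two sample lines, main prints foo with count 2 and la with count 3, one tab-separated pair per line.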

javac -classpath `yarn classpath` -d . WordCount.java

jar -cf WordCount.jar WordCount.class 'WordCount$WCMapper.class' 'WordCount$WCReducer.class'

hadoop jar WordCount.jar WordCount /Users/cpollett/tmp.txt /Users/cpollett/output

hadoop fs -ls /Users/cpollett/output/

hadoop fs -cat /Users/cpollett/output/part-r-00000

2018-12-05 11:28:01,201 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
	1
foo	2
la	3