Approximation Algorithms

CS255

Chris Pollett

Apr 30, 2018

APPROX-VERTEX-COVER(G)

1 C=∅

2 E'= E[G]

3 while E' ≠ ∅

4 let {u, v} be an arbitrary edge of E'

5 C = C ∪ {u, v}

6 Remove from E' every edge incident with either u or v

7 return C.

Theorem. APPROX-VERTEX-COVER is a p-time 2-approximation algorithm.

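Proof. First, the algorithm runs in time `O(|V| + |E|)` using adjacency lists: each vertex is added to `C` at most once, so scanning the adjacency lists of `u` and `v` to remove their incident edges costs `O(|V| + |E|)` in total, and each pass through the loop removes at least one edge.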

The set `C` returned by the algorithm is a vertex cover, since each edge that is removed is covered by some vertex in `C`, and the loop continues until no edges are left.

To see that the cover returned is at most twice the optimal, let `A` denote the set of edges that were picked in line 4. In order to cover the edges in `A`, any vertex cover (including an optimal cover `C^star`) must include at least one endpoint of each edge in `A`. No two edges in `A` share an endpoint, since once an edge is picked, line 6 removes every other edge incident with its endpoints; so no two edges of `A` are covered by the same vertex of `C^star`. Hence `|C^star| ge |A|`. On the other hand, `|C| = 2|A|`, so `|C| le 2|C^star|`.
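A minimal Python sketch of APPROX-VERTEX-COVER, assuming the graph is given as a list of undirected edges (a representation the notes do not fix; the name `approx_vertex_cover` is hypothetical). For clarity it rescans `E'` on every pass, so it runs in `O(|E|^2)` time rather than the `O(|V| + |E|)` achievable with adjacency lists.

```python
def approx_vertex_cover(edges):
    """APPROX-VERTEX-COVER on an undirected graph given by its edge list."""
    remaining = {frozenset(e) for e in edges}  # E' = E[G]
    cover = set()                              # C = empty set
    while remaining:
        edge = tuple(next(iter(remaining)))    # an arbitrary edge {u, v} of E'
        u, v = edge[0], edge[-1]               # u == v only for a self-loop
        cover.update((u, v))                   # C = C union {u, v}
        # Remove from E' every edge incident with either u or v.
        remaining = {e for e in remaining if u not in e and v not in e}
    return cover
```

On a star with center `0` and five leaves, every edge contains `0`, so the sketch returns a cover of size 2 while the optimum `{0}` has size 1; the factor 2 in the theorem can be attained.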

Which of the following statements is true?

APPROX-TSP-TOUR(G, c)

1. Select a vertex r to be a root vertex.

2. Compute a minimum spanning tree T for G from root r using Prim's algorithm.

3. Let L be the list of vertices visited in a pre-order tree walk of T.

4. Return the Hamiltonian cycle H that visits the vertices in the order L (and then returns to r).

MST-PRIM(G, w, r) // r is the starting vertex from which the tree is grown

for each u in G.V: u.key = ∞, u.pi = NIL

r.key = 0

Q = MAKE-QUEUE(G.V) // min-priority queue on key; initially holds all vertices

while Q ≠ ∅:

u = EXTRACT-MIN(Q)

for each v in G.Adj[u]:

if v ∈ Q and w(u, v) < v.key: v.pi = u, v.key = w(u, v) // via the appropriate DECREASE-KEY
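A minimal Python sketch of MST-PRIM and APPROX-TSP-TOUR, assuming the input is a complete graph on vertices `0, ..., n-1` given as an `n x n` cost matrix (a hypothetical representation; `mst_prim`, `approx_tsp_tour` and `tour_cost` are illustrative names). EXTRACT-MIN is a linear scan over an array, which gives the `O(|V|^2)` running time for Prim's algorithm on a complete graph.

```python
import math

def mst_prim(cost, r=0):
    """MST-PRIM on the complete graph with edge costs cost[u][v]; returns pi."""
    n = len(cost)
    keys = [math.inf] * n       # u.key
    pi = [None] * n             # u.pi = NIL
    keys[r] = 0
    in_q = [True] * n           # Q initially holds every vertex
    for _ in range(n):
        # EXTRACT-MIN by a linear scan: O(|V|) per call, O(|V|^2) overall.
        u = min((v for v in range(n) if in_q[v]), key=lambda v: keys[v])
        in_q[u] = False
        for v in range(n):
            if v != u and in_q[v] and cost[u][v] < keys[v]:
                pi[v] = u
                keys[v] = cost[u][v]  # the DECREASE-KEY step
    return pi

def approx_tsp_tour(cost, r=0):
    """APPROX-TSP-TOUR: pre-order walk of the MST, closed back at the root."""
    pi = mst_prim(cost, r)
    children = [[] for _ in cost]
    for v, parent in enumerate(pi):
        if parent is not None:
            children[parent].append(v)
    order, stack = [], [r]
    while stack:                # iterative pre-order walk of T
        u = stack.pop()
        order.append(u)
        stack.extend(reversed(children[u]))  # visit children in increasing order
    return order + [r]          # the Hamiltonian cycle H

def tour_cost(cost, tour):
    """Total cost of consecutive edges along the tour."""
    return sum(cost[a][b] for a, b in zip(tour, tour[1:]))
```

For the corners `(0,0), (0,1), (1,1), (1,0)` of a unit square with Euclidean costs (which satisfy the triangle inequality), the MST has cost 3 and the returned tour `[0, 1, 2, 3, 0]` has cost 4.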

Theorem. APPROX-TSP-TOUR is a p-time 2-approximation algorithm for TSP when the cost function satisfies the triangle inequality.

Proof. Prim's algorithm runs in time `O(|V|^2)` on the complete graph (for example, with an array-based priority queue). The remaining steps, the pre-order walk and listing the cycle, take `O(|V|)` time.

Let `H^star` denote an optimal tour of the vertices. Since deleting any edge from a tour yields a spanning tree, and edge costs are nonnegative, we have `c(T) le c(H^star)`, where `T` is our minimum spanning tree. A full walk `F` of `T` lists each vertex when it is first visited and also whenever it is returned to after a visit to a subtree. A full walk traverses every edge of `T` exactly twice, so `c(F) = 2c(T) le 2c(H^star)`. A full walk is typically not a tour, since it lists some vertices more than once.

On the other hand, the `H` returned by the algorithm is a tour and satisfies `c(H) le c(F)`, since it is obtained from the full walk by deleting every occurrence of a vertex after its first (keeping the final return to the root), and the triangle inequality holds. The triangle inequality is used as follows: if the full walk contains a sequence `u v w` and we delete `v`, then `c(u, w) le c(u, v) + c(v, w)`, so the cost does not rise. Therefore `c(H) le c(F) le 2c(H^star)`.

Theorem. If `P ne NP`, then for any constant `d ge 1`, there is no `p`-time approximation algorithm with approximation ratio `d` for general TSP.

Proof. Suppose that for some number `d ge 1` there were a p-time approximation algorithm `A` for general TSP with approximation ratio `d`. Without loss of generality, we can assume `d` is an integer (by rounding up). We will then show how to use `A` to solve instances of HAM-CYCLE in p-time. Since HAM-CYCLE is NP-complete, this would imply `P = NP`, contradicting our hypothesis.

Let `G = (V, E)` be an instance of the HAM-CYCLE problem. Let `G' = (V, E')` be the complete graph on `V`, and assign a cost to each edge in `E'` as follows:

`c(u,v) = {(1,mbox(if ){u,v} in E),(d cdot |V| + 1,mbox(otherwise.)):}`

This instance `(G', c)` of the TSP optimization problem can be created in p-time in the length of the HAM-CYCLE instance, since `d` is a constant. If the original graph has a Hamiltonian cycle, then the tour following its edges has cost `|V|`. On the other hand, if `G` has no Hamiltonian cycle, then every tour uses at least one edge not in `E`, so has cost at least `(d cdot |V| + 1) + (|V| - 1) > d cdot |V|`. Since our approximation algorithm must find a tour within a factor of `d` of the cheapest one, if `G` has a Hamiltonian cycle then the tour output by `A` will have cost `le d cdot |V|`. If `G` does not have a Hamiltonian cycle, every tour, including the one `A` returns, has cost `> d cdot |V|`. So comparing the cost of the tour output by `A` with `d cdot |V|` decides HAM-CYCLE in p-time.
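The reduction can be sketched in Python. As an assumption for illustration only, a brute-force exact TSP solver (`exact_tsp`, a hypothetical helper taking exponential time) stands in for the approximation algorithm `A`: an exact solver has ratio 1, so it is in particular a `d`-approximation for every `d ge 1`. Any procedure returning the cost of a tour within a factor `d` of optimal could be passed in its place. Vertices are assumed to be `0, ..., n-1` with `n ge 3`.

```python
from itertools import permutations

def reduction_costs(n, edges, d):
    """Cost matrix of the complete graph G' built from G = (V, E)."""
    E = {frozenset(e) for e in edges}
    expensive = d * n + 1  # cost d*|V| + 1 for pairs not in E
    return [[0 if u == v else (1 if frozenset((u, v)) in E else expensive)
             for v in range(n)] for u in range(n)]

def exact_tsp(cost):
    """Cost of an optimal tour by brute force (stand-in for A; exponential time)."""
    n = len(cost)
    best = None
    for perm in permutations(range(1, n)):  # fix vertex 0 as the start
        tour = (0,) + perm + (0,)
        total = sum(cost[a][b] for a, b in zip(tour, tour[1:]))
        if best is None or total < best:
            best = total
    return best

def has_ham_cycle(n, edges, d=2, tsp_approx=exact_tsp):
    """Decide HAM-CYCLE by comparing the returned tour cost with d*|V|."""
    return tsp_approx(reduction_costs(n, edges, d)) <= d * n
```

For the 4-cycle `0-1-2-3-0` the best tour costs 4, which is at most `2 cdot 4`; for the path `0-1-2-3` every tour needs an edge of cost 9, so the answer is no.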

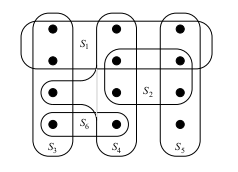

Given a set cover instance `(X, F)`, where `F` is a family of subsets of `X` whose union is `X`, one can give a greedy algorithm for finding a cover: at each stage, pick the set `S in F` that covers the greatest number of elements that are still uncovered.

GREEDY-SET-COVER(X, F)

U := X

C := ∅

while U ≠ ∅

select an S ∈ F that maximizes |S ∩ U|

U := U - S

C := C ∪ {S}

return C
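A minimal Python sketch of GREEDY-SET-COVER, assuming `X` is a Python set and `F` a list of sets (the names follow the pseudocode; the error check for an instance `F` cannot cover is an addition not in the notes). Each iteration scans every set in `F`, matching the simple polynomial bound in the proof of the next theorem.

```python
def greedy_set_cover(X, F):
    """GREEDY-SET-COVER: X is a set, F a list of sets whose union is X."""
    U = set(X)  # elements not yet covered
    C = []      # sets chosen so far
    while U:
        # Pick the set covering the most uncovered elements (first one on ties).
        S = max(F, key=lambda s: len(s & U))
        if not S & U:
            raise ValueError("the sets in F do not cover X")
        U -= S
        C.append(S)
    return C
```

For `X = {1, ..., 5}` and `F = [{1,2,3}, {2,4}, {3,4}, {4,5}]`, the sketch first picks `{1,2,3}` and then `{4,5}`.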

Let `H(d) = sum_(i=1)^d 1/i` denote the `d`th harmonic number, defining `H(0) = 0`.

Theorem. GREEDY-SET-COVER is a polynomial-time `r(n)`-approximation algorithm, where `r(n) = H(max{|S| : S in F})`, on instances `(X,F)` of size `n`.

Proof. Each iteration of GREEDY-SET-COVER covers at least one new element (since the sets in `F` cover `X`), so the loop runs at most `|X|` times. The select step takes at most quadratic time, so the algorithm runs in time polynomial in the instance size.

To see that GREEDY-SET-COVER is an `r(n)`-approximation algorithm, we assign a cost of `1` to each set selected by the algorithm, distribute this cost over the elements covered for the first time, and then use these costs to derive the desired relationship between the size of an optimal set cover `C^star` and the size of the cover `C` returned by the algorithm.

Let `S_i` denote the `i`th set selected by GREEDY-SET-COVER. We spread the cost of selecting `S_i`, namely 1, evenly among the elements covered for the first time by `S_i`. Let `c_x` denote the cost allocated to element `x in X`. If `x` is covered for the first time by `S_i`, then

`c_x = 1/(|S_i - (S_1 cup S_2 cup ... cup S_(i-1))|)`.

At each step of the algorithm, 1 unit of cost is assigned, and so

`|C| = sum_(x in X)c_x`.

Now charge each set of an optimal cover `C^star` the costs of its elements, giving the sum

`sum_(S in C^star)sum_(x in S)c_x`,

and as each `x in X` is in at least one `S in C^star`, we have

`sum_(S in C^star)sum_(x in S)c_x ge sum_(x in X)c_x = |C|` (**).

We will show the theorem follows from the following claim:

Claim. `sum_(x in S)c_x le H(|S|)` for all `S in F`.

(Proof of Theorem from Claim). From (**) and the claim, we have

`|C| le sum_(S in C^star) H(|S|)`

`le |C^star| cdot H(max{|S| : S in F})`

(Proof of Claim.) Consider any `S in F`, and for `i = 1, ..., |C|` let

`u_i = |S - (S_1 cup S_2 cup ... cup S_(i))|`.

We define `u_0 = |S|`. Let `k` be the least index such that `u_k = 0`.

Thus after `S_k` is selected, every element of `S` is covered by at least one of `S_1, ..., S_k`.

We have `u_(i-1) ge u_i`, and exactly `u_(i-1) - u_i` elements of `S` are covered for the first time by `S_i`. Thus,

`sum_(x in S)c_x = sum_(i = 1)^k(u_(i-1) - u_i) cdot 1/(|S - (S_1 cup S_2 cup ... cup S_(i -1))|)`

Observe that

`|S_i - (S_1 cup S_2 cup ... cup S_(i - 1))| ge |S - (S_1 cup S_2 cup ... cup S_(i - 1))| = u_(i - 1)`

because we chose `S_i` greedily: at step `i` the set `S` was also available and would have covered `u_(i-1)` new elements. This gives

`sum_(x in S)c_x le sum_(i = 1)^k(u_(i-1) - u_i) cdot 1/(u_(i-1))`

`= sum_(i=1)^k sum_(j = u_i + 1)^(u_(i-1))1/(u_(i-1))`

`le sum_(i=1)^k sum_(j = u_i + 1)^(u_(i-1)) 1/j` (since `j le u_(i-1)` throughout the inner sum)

`= sum_(i=1)^k ( sum_(j = 1)^(u_(i-1)) 1/j - sum_(j = 1)^(u_(i)) 1/j)`

`= sum_(i=1)^k (H(u_(i-1)) - H(u_i))`

`= H(u_0) - H(u_k)` (telescoping series)

`= H(u_0) - H(0)`

`= H(u_0)`

`=H(|S|)`, proving the claim.
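The charging argument can be checked on small instances. The following Python sketch (hypothetical helper names `harmonic` and `greedy_with_costs`) reruns the greedy algorithm, records each `c_x` with exact rational arithmetic, and lets one test both `|C| = sum_(x in X) c_x` and the claim `sum_(x in S) c_x le H(|S|)` for every `S in F`.

```python
from fractions import Fraction

def harmonic(d):
    """H(d) = 1 + 1/2 + ... + 1/d, with H(0) = 0, as an exact fraction."""
    return sum((Fraction(1, i) for i in range(1, d + 1)), Fraction(0))

def greedy_with_costs(X, F):
    """Run the greedy cover and record the cost c_x charged to each element."""
    U, C, c = set(X), [], {}
    while U:
        S = max(F, key=lambda s: len(s & U))
        new = S & U  # elements covered for the first time by this S_i
        if not new:
            raise ValueError("the sets in F do not cover X")
        for x in new:
            c[x] = Fraction(1, len(new))  # spread the unit cost evenly
        U -= new
        C.append(S)
    return C, c
```

On the instance `X = {1, ..., 6}`, `F = [{1,2,3,4}, {1,5}, {2,5}, {3,6}, {4,6}, {5,6}]`, elements 1 to 4 are charged `1/4` each and elements 5 and 6 are charged `1/2` each, so the costs sum to `|C| = 2`.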

Theorem. Suppose that the random walk algorithm with `r=2n^2` is applied to any satisfiable instance of 2SAT with `n` variables. Then the probability that a satisfying truth assignment will be discovered is at least `1/2`.
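The random walk algorithm itself is not spelled out in this section. The Python sketch below assumes the usual formulation: start from an arbitrary assignment and, for up to `r` steps, pick an unsatisfied clause and flip one of its two literals chosen uniformly at random. Clauses are assumed to be pairs of nonzero integers, with `k` meaning `x_k` and `-k` meaning `not x_k`; the names `random_walk_2sat` and `lit_true` are hypothetical.

```python
import random

def lit_true(lit, assign):
    """True if literal lit (k or -k) holds under assign (dict var -> bool)."""
    return assign[abs(lit)] == (lit > 0)

def random_walk_2sat(n, clauses, r=None, rng=random):
    """Return a satisfying assignment found within r flips, or None."""
    if r is None:
        r = 2 * n * n  # the bound r = 2n^2 used in the theorem
    assign = {k: False for k in range(1, n + 1)}  # arbitrary starting assignment
    for _ in range(r):
        unsat = [cl for cl in clauses if not any(lit_true(l, assign) for l in cl)]
        if not unsat:
            return assign
        # Pick an unsatisfied clause (here at random), then one of its literals.
        lit = rng.choice(rng.choice(unsat))
        assign[abs(lit)] = not assign[abs(lit)]  # the flip step
    if all(any(lit_true(l, assign) for l in cl) for cl in clauses):
        return assign  # the last flip may have produced a satisfying assignment
    return None
```

If each run succeeds with probability at least `1/2`, as the theorem states, then `m` independent runs all fail on a satisfiable instance with probability at most `2^(-m)`.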

Proof. Let `T` be a truth assignment that satisfies the given 2SAT instance `I`. Let `t(i)` denote the expected number of repetitions of the flip step until a satisfying assignment is found, starting from an assignment `T'` that differs from `T` in at most `i` positions. Notice:

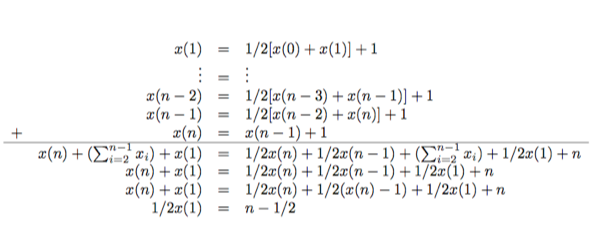

The worst case is when the relations for `t`, in particular (3), hold as equations. This gives the recurrence `x(0) = 0`, `x(n) = x(n-1) + 1`, and `x(i) = 1/2(x(i-1) + x(i+1)) + 1` for `0 < i < n`.
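One way to solve this recurrence, sketched here as an illustration and not necessarily the route the notes take: set `d_i = x(i) - x(i-1)`. The middle equation gives `d_(i+1) = d_i - 2`, and `x(n) = x(n-1) + 1` gives `d_n = 1`, so `d_i = 1 + 2(n-i)` and `x(i) = sum_(j=1)^i d_j = 2ni - i^2`. The small Python check below (hypothetical names `worst_case_flips` and `satisfies_recurrence`) builds `x` from these differences so the closed form and the recurrence can be confirmed for small `n`.

```python
def worst_case_flips(n):
    """x(0), ..., x(n) built from the differences d_i = x(i) - x(i-1) = 1 + 2(n - i)."""
    x = [0]  # x(0) = 0
    for i in range(1, n + 1):
        x.append(x[-1] + 1 + 2 * (n - i))
    return x

def satisfies_recurrence(x):
    """Check x(0)=0, x(n)=x(n-1)+1 and x(i) = (x(i-1)+x(i+1))/2 + 1 for 0 < i < n."""
    n = len(x) - 1
    return (x[0] == 0 and x[n] == x[n - 1] + 1
            and all(2 * x[i] == x[i - 1] + x[i + 1] + 2 for i in range(1, n)))
```

Since `x(i) = 2ni - i^2 le n^2`, the expected number of flips from any starting assignment is at most `n^2`; by Markov's inequality, the probability that more than `2n^2` flips are needed is then at most `1/2`, which is the bound in the theorem.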