Randomized Complexity Classes

CS254

Chris Pollett

Apr 24, 2017

    function NewCoin()
    {
        while (true) {
            // Flip the underlying coin twice.
            x = Coin();
            y = Coin();
            // Only the outcomes HT and TH are used; HH and TT are discarded.
            if (x != y) {
                return x;  // 'H' on HT, 'T' on TH
            }
        }
    }
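The pseudocode above appears to be von Neumann's trick for getting a fair coin from a biased one. The following Python sketch simulates it under the assumption that `Coin()` returns `H` with a fixed probability `p` independently on each call; in that case the outcomes HT and TH each occur with probability `p(1-p)`, so the returned value is unbiased. The names `make_biased_coin`, `new_coin`, and `heads_fraction` are illustrative and do not come from the slides.

```python
import random


def make_biased_coin(p_heads, rng):
    """Return a coin function that yields 'H' with probability p_heads (assumed model)."""
    def coin():
        return 'H' if rng.random() < p_heads else 'T'
    return coin


def new_coin(coin):
    """Flip the coin in pairs until the two flips differ, then return the first flip."""
    while True:
        x = coin()
        y = coin()
        if x != y:
            return x


def heads_fraction(p_heads, trials, seed=0):
    """Empirical fraction of 'H' produced by new_coin over the given number of trials."""
    rng = random.Random(seed)
    coin = make_biased_coin(p_heads, rng)
    heads = sum(new_coin(coin) == 'H' for _ in range(trials))
    return heads / trials
```

For example, `heads_fraction(0.8, 20000)` should come out close to 0.5 even though the underlying coin shows heads 80% of the time.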

Definition. A probabilistic Turing machine (PTM) is a TM with two transition functions `delta_0`, `delta_1`. To execute a PTM `M` on input `x`, we choose at each step whether to apply `delta_0` or `delta_1`. The sequences of these choices form a sample space, and in what follows we always assume that each step's choice is made independently, with `delta_0` and `delta_1` each having probability 1/2.

The machine only outputs `1` (Accept) or `0` (Reject). We denote by `M(x)` the random variable corresponding to the value `M` writes at the end of the process. For a function `T: NN -> NN`, we say that `M` runs in time `T(n)` if for any input `x`, `M` halts on `x` within `T(|x|)` steps regardless of the choices `M` makes.
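As a rough illustration of `M(x)` as a random variable, the sketch below models a machine as a Python function of the input and a tuple of choice bits (one bit per step, selecting `delta_0` or `delta_1`), and computes `Pr[M(x) = 1]` exactly by enumerating all `2^T` equally likely choice sequences. The function names and the toy machine are hypothetical and only meant to make the definition concrete.

```python
from fractions import Fraction
from itertools import product


def acceptance_probability(machine, x, steps):
    """Exact Pr[M(x) = 1] when the machine makes `steps` fair binary choices."""
    accepting = sum(machine(x, coins) for coins in product((0, 1), repeat=steps))
    return Fraction(accepting, 2 ** steps)


def sample_bit_machine(x, coins):
    """Toy machine: read the choice bits as a binary index into x and output that bit."""
    index = 0
    for bit in coins:
        index = 2 * index + bit
    return int(x[index])
```

With the input `"1101"` and two choice bits, each of the four positions is read with probability 1/4, so `acceptance_probability(sample_bit_machine, "1101", 2)` equals `Fraction(3, 4)`.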

Claim. For every input `k, a_1, ..., a_n` to FindKthElement, let `T(k, a_1, ..., a_n)` be the expected number of steps the algorithm takes on this input. Let `T(n)` be the maximum of `T(k, a_1, ..., a_n)` over all length `n` inputs. Then `T(n) = O(n)`.
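The definition of FindKthElement is not reproduced in this part of the notes. The sketch below assumes it is the standard randomized selection procedure: pick a uniformly random pivot `x`, partition around it, and continue on the side that contains the `k`th smallest element. The function name and the handling of duplicates are choices made for this example.

```python
import random


def find_kth_element(k, items, rng=random):
    """Return the k-th smallest element of items (k is 1-indexed)."""
    items = list(items)
    if not 1 <= k <= len(items):
        raise ValueError("k must be between 1 and len(items)")
    while True:
        pivot = rng.choice(items)                 # uniformly random pivot
        smaller = [a for a in items if a < pivot]
        equal_count = sum(1 for a in items if a == pivot)
        if k <= len(smaller):
            items = smaller                       # answer lies below the pivot
        elif k <= len(smaller) + equal_count:
            return pivot                          # the pivot is the answer
        else:
            k -= len(smaller) + equal_count       # skip pivot and smaller items
            items = [a for a in items if a > pivot]
```

Each round does linear work in the current list length, which is the `cn` term in the recurrence used by the proof.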

Proof. All non-recursive operations of the algorithm take linear time, say at most `cn` steps for some constant `c`. We prove by induction that `T(n) le 10cn`. Fix an input `k, a_1, ..., a_n`. For every `j in [n]`, we choose `x` to be the `j`th smallest of `a_1, ..., a_n` with probability `1/n`, and then we perform either at most `T(j)` steps or at most `T(n-j)` steps. Thus, we have:

`T(k, a_1, ..., a_n) le cn + 1/n(sum_(j>k)T(j) + sum_(j < k)T(n-j))`

Plugging in the induction hypothesis `T(j) le 10cj` for `j < n` gives

`T(k, a_1, ..., a_n) le cn + 10 frac(c)(n)(sum_(j>k) j + sum_(j < k)(n-j)) le cn +10 frac(c)(n)(sum_(j > k) j +kn - sum_(j < k) j)`

Next, using `sum_(j > k) j = ((n-k)(n+k+1))/2 le (n^2 - k^2)/2 + n/2` and `sum_(j < k) j = (k(k-1))/2 ge k^2/2 - n/2`, we get

`T(k, a_1, ..., a_n) le cn + 10 frac(c)(n)((n^2 - k^2)/2 + n/2 + kn - k^2/2 + n/2) = cn + 10 frac(c)(n)(n^2/2 + k(n-k) + n)`

Since `k(n-k) le n^2/4`, the bracketed term is at most `(3n^2)/4 + n`, which is at most `(9n^2)/10` once `n ge 7` (smaller `n` are covered by taking `c` large enough in the base case). Hence this last expression is at most

`cn + (10c)/n (9n^2)/10 = 10cn`.

Remark. The corresponding decision problem is: given `z, k, a_1, ..., a_n`, is `z` the `k`th smallest element of `a_1, ..., a_n`? The algorithm above always outputs the correct answer but uses randomness, and its expected running time is `O(n)`. Since one can also show that its worst-case running time is `O(n^2)`, it is a BPP algorithm.

Theorem. Suppose that the random walk algorithm with `r=2n^2` is applied to any satisfiable instance of 2SAT with `n` variables. Then the probability that a satisfying truth assignment will be discovered is at least `1/2`.
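The random walk algorithm itself is not shown in this part of the notes. The sketch below assumes the usual formulation: start from an arbitrary truth assignment and repeat `r` times: if every clause is satisfied, stop and return the assignment; otherwise take an unsatisfied clause, pick one of its two literals at random, and flip that variable. The clause encoding (a literal `v` means variable `v` is true, `-v` means it is false) and the function names are assumptions of this example.

```python
import random


def satisfies(clauses, assignment):
    """True if the assignment (list of bools for variables 1..n) satisfies every clause."""
    return all(any(assignment[abs(l) - 1] == (l > 0) for l in c) for c in clauses)


def random_walk_2sat(clauses, n, r=None, rng=random):
    """Return a satisfying assignment, or None ("probably unsatisfiable")."""
    if r is None:
        r = 2 * n * n                      # the bound used in the theorem
    assignment = [False] * n               # arbitrary starting assignment
    for _ in range(r):
        unsatisfied = [c for c in clauses
                       if not any(assignment[abs(l) - 1] == (l > 0) for l in c)]
        if not unsatisfied:
            return assignment
        literal = rng.choice(unsatisfied[0])   # random literal of an unsatisfied clause
        assignment[abs(literal) - 1] = not assignment[abs(literal) - 1]
    return None
```

A returned assignment is always correct; only the answer `None` can be wrong, and by the theorem that happens with probability at most `1/2` on a satisfiable instance, so independent repetitions drive the error down.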

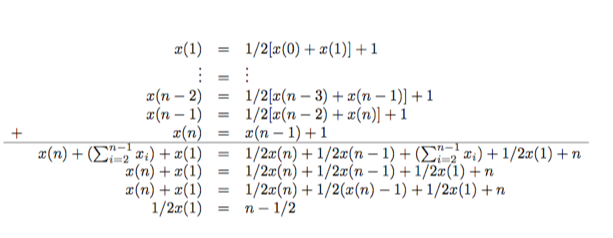

Proof. Let `T` be a truth assignment which satisfies the given 2SAT instance `I`. Let `t(i)` denote the expected number of repetitions of the flip step until a satisfying assignment is found, starting from an assignment `T'` which differs in at most `i` positions from `T`. Notice:

(1) `t(0) = 0`

(2) `t(n) le t(n-1) + 1`

(3) `t(i) le 1/2(t(i-1) + t(i+1)) + 1` for `0 < i < n`

The worst case is when relation (3) for `t` holds as an equation. This gives the recurrence `x(0)=0`; `x(n)=x(n-1)+1`; `x(i) = 1/2(x(i-1)+x(i+1))+1` for `0 < i < n`.
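As a sanity check on this recurrence, the sketch below verifies that the closed form `x(i) = 2in - i^2` satisfies all three equations for small `n`. The closed form is stated here as an assumption of the example rather than taken from the slide; it gives `x(n) = n^2`, which fits the choice `r = 2n^2` in the theorem (by Markov's inequality, a walk with expected length at most `n^2` exceeds `2n^2` steps with probability at most `1/2`).

```python
def walk_bound(i, n):
    """Candidate closed form x(i) = 2in - i^2 for the recurrence above."""
    return 2 * i * n - i * i


def satisfies_recurrence(n):
    """Check x(0) = 0, x(n) = x(n-1) + 1, and x(i) = (x(i-1) + x(i+1))/2 + 1."""
    x = [walk_bound(i, n) for i in range(n + 1)]
    if x[0] != 0 or x[n] != x[n - 1] + 1:
        return False
    # Multiply the interior equation by 2 to stay in integer arithmetic.
    return all(2 * x[i] == x[i - 1] + x[i + 1] + 2 for i in range(1, n))
```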