Randomized Algorithms, Error Reduction, Relationships

CS254

Chris Pollett

Nov. 23, 2011


Theorem. Suppose that the random walk algorithm with `r=2n^2` is applied to any satisfiable instance of 2SAT with `n` variables. Then the probability that a satisfying truth assignment will be discovered is at least `1/2`.

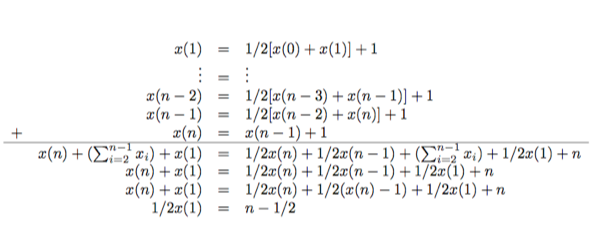
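The random walk in the theorem can be sketched in Python as follows. This is a minimal sketch, assuming the usual formulation: start from some assignment (here a random one, which is an assumption), and while some clause is unsatisfied, pick such a clause and flip one of its two literals chosen at random. The function name `random_walk_2sat` and the clause encoding (the integer `v` stands for variable `v`, and `-v` for its negation) are illustrative choices, not taken from the lecture.

```python
import random

def random_walk_2sat(clauses, n, rng=None):
    """Random walk for 2SAT with r = 2n^2 flips.

    clauses: list of pairs of nonzero ints; v means x_v, -v means (not x_v).
    Returns a satisfying assignment as a list of n booleans, or None
    ("probably unsatisfiable").
    """
    rng = rng or random.Random()
    # Assumption: start from a uniformly random assignment (index 0 unused).
    value = [rng.random() < 0.5 for _ in range(n + 1)]

    def holds(lit):
        return value[abs(lit)] == (lit > 0)

    def unsatisfied():
        return [c for c in clauses if not (holds(c[0]) or holds(c[1]))]

    for _ in range(2 * n * n):
        bad = unsatisfied()
        if not bad:
            return value[1:]
        lit = rng.choice(rng.choice(bad))      # random literal of an unsatisfied clause
        value[abs(lit)] = not value[abs(lit)]  # the flip step
    # Final check after the last flip.
    return value[1:] if not unsatisfied() else None
```

A returned assignment is always correct; only the answer `None` can be wrong, and by the theorem that happens with probability at most `1/2` on a satisfiable instance.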

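Proof. Let `T` be a truth assignment which satisfies the given 2SAT instance `I`. Let `t(i)` denote the expected number of repetitions of the flip step until a satisfying assignment is found, starting from an assignment `T'` which differs in at most `i` positions from `T`. Notice:

(1) `t(0) = 0`; (2) `t(n) le t(n-1) + 1`; (3) `t(i) le 1/2(t(i-1)+t(i+1))+1` for `0 < i < n`, because an unsatisfied clause contains at least one literal on which `T'` and `T` disagree, so a random flip moves `T'` closer to `T` with probability at least `1/2`.

The worst case is when relations (2) and (3) hold as equations, giving values `x(i) ge t(i)` with `x(0)=0`; `x(n)=x(n-1)+1`; `x(i) = 1/2(x(i-1)+x(i+1))+1`. Solving gives `x(i) = 2 i n - i^2`, so `t(i) le x(n) = n^2`. By Markov's inequality, the probability that more than `2n^2` flips are needed is at most `1/2`. Q.E.D.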
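As a quick sanity check on the worst-case recurrence for `x`, the following sketch verifies that the candidate closed form `x(i) = 2 i n - i^2` satisfies all three equations, so that `x(n) = n^2`. The function names are hypothetical and chosen only for this illustration.

```python
def closed_form(i, n):
    """Candidate solution x(i) = 2*i*n - i^2 of the worst-case recurrence."""
    return 2 * i * n - i * i

def satisfies_recurrence(n):
    """Check x(0)=0, x(n)=x(n-1)+1 and x(i)=(x(i-1)+x(i+1))/2+1 for 0<i<n."""
    x = [closed_form(i, n) for i in range(n + 1)]
    if x[0] != 0 or x[n] != x[n - 1] + 1:
        return False
    # Multiply the middle equation by 2 to stay in integer arithmetic.
    return all(2 * x[i] == x[i - 1] + x[i + 1] + 2 for i in range(1, n))
```

Since the expected number of flips is at most `n^2`, running the walk for `2n^2` flips fails with probability at most `1/2` by Markov's inequality.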

Definition. `RTIME(T(n))` contains every language `L` for which there is a probabilistic TM `M` running in `T(n)` time such that:

`x in L => Pr[M(x) = 1] ge 2/3`

`x !in L => Pr[M(x) = 1] = 0`

We define `RP = cup_c RTIME(n^c)`.
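To illustrate one-sided error and how repetition reduces it, here is a minimal sketch built on a standard toy example that is not from the lecture: deciding whether two integer polynomials differ by evaluating them at a random point. The names `differ_once` and `differ_amplified`, and the choice of `3d` sample points, are assumptions made for this illustration.

```python
import random

def evaluate(coeffs, a):
    """Horner evaluation of sum(coeffs[i] * a**i)."""
    value = 0
    for c in reversed(coeffs):
        value = value * a + c
    return value

def differ_once(p, q, rng):
    """One-sided test for 'p != q' (polynomials as coefficient lists).

    If p == q it never accepts. If p != q, then p - q has degree < d and so
    fewer than d roots among the 3d sample points, hence it accepts with
    probability greater than 2/3.
    """
    d = max(len(p), len(q))
    a = rng.randrange(3 * d)
    return evaluate(p, a) != evaluate(q, a)

def differ_amplified(p, q, k, rng):
    """Accept if any of k independent trials accepts; error at most (1/3)^k."""
    return any(differ_once(p, q, rng) for _ in range(k))
```

Because a single accepting run is conclusive, `k` independent repetitions drive the error on inputs in the language down to at most `(1/3)^k` while the error on inputs outside the language stays at `0`.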

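So our 2SAT random walk algorithm is an RP-style algorithm: it never reports a satisfying assignment for an unsatisfiable instance, and its success probability of `1/2` on satisfiable instances can be raised above `2/3` by independent repetition. Algorithms with bounded error, as in RP and BPP, are often called Monte Carlo algorithms; algorithms that never err but whose running time is a random variable are called Las Vegas algorithms.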

RP has "one-sided error"; one might ask for randomized algorithms that have "zero-sided error":

Definition. The class `ZTIME(T(n))` contains all the languages `L` for which there is a probabilistic TM `M` that runs in expected time `O(T(n))` such that for every input `x`, whenever `M` halts on `x`, the output `M(x)` it produces is exactly `L(x)`.

We define `ZPP = cup_c ZTIME(n^c)`.

One can show:

`ZPP = RP cap coRP`

`RP subseteq BPP`

`coRP subseteq BPP`
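One direction of `ZPP = RP cap coRP` can be sketched as follows: given an RP-style machine and a coRP-style machine for the same language, alternate them until one gives a conclusive answer. This is only a sketch under stated assumptions; the toy language (even integers) and the two simulated machines are hypothetical stand-ins that use the true answer internally purely to model the error behaviour.

```python
import random

def in_language(x):
    """Toy language (an assumption for this sketch): the even integers."""
    return x % 2 == 0

def rp_machine(x, rng):
    """Never accepts x not in L; accepts x in L with probability 2/3."""
    return in_language(x) and rng.random() < 2 / 3

def corp_machine(x, rng):
    """Never rejects x in L; rejects x not in L with probability 2/3."""
    return in_language(x) or rng.random() >= 2 / 3

def zero_error_decide(x, rng):
    """Repeat until one machine gives a conclusive answer.

    Returns (answer, rounds). The answer is always correct; each round is
    conclusive with probability at least 2/3, so the expected number of
    rounds is at most 3/2.
    """
    rounds = 0
    while True:
        rounds += 1
        if rp_machine(x, rng):        # acceptance by the RP machine is proof of x in L
            return True, rounds
        if not corp_machine(x, rng):  # rejection by the coRP machine is proof of x not in L
            return False, rounds
```

The output is never wrong; only the running time is random, which is the "zero-sided error" behaviour in the definition of `ZTIME`.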

If `t` is a positive real number, then

`Pr[X ge (1+c)pn]= Pr[e^(tX) ge e^(t(1+c)pn)]` (*)

By Markov's Inequality,

`Pr[e^(tX) ge k cdot E(e^(tX))] le 1/k` for any real `k > 0`.

Taking `k=e^(t(1+c)pn)/[E(e^(tX))]` and using (*) gives

`Pr[X ge (1+c)pn] le [E(e^(tX))]cdot e^(-t(1+c)pn)`. (**)

Since `X= sum_(i=1)^n X_i` and the `X_i` are independent and identically distributed, we have `E(e^(tX))=[E(e^(tX_1))]^n`, which in turn equals `(1 + p(e^t-1))^n`.

Substituting this into (**) gives:

`Pr[X ge (1+c)pn] le e^(-t(1+c)pn) cdot (1 + p(e^t-1))^n le e^(-t(1+c)pn) cdot e^(pn (e^t-1))`, since `(1+a)^n le e^(an)`.

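Take `t=ln(1+c)` to get `Pr[X ge (1+c)pn] le e^(pn(c-(1+c)ln(1+c)))`. Taylor expanding `ln(1+c)` as `c - c^2/2 + c^3/3 - ...`

and substituting gives the result, i.e., for `0 le c le 1`,

`e^(pn(c-(1+c)ln(1+c))) = e^(pn(-c^2/2 + c^3/6 - c^4/12 + ...)) le e^(-(c^2 pn)/3)`,

since the series alternates with decreasing terms and `c^3/6 le c^2/6`. (The constant `1/2` in the exponent is not attainable for this upper tail: at `c = 1` the exponent is `pn(1 - 2 ln 2)`, which is about `-0.386pn`.)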
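The bound obtained with `t = ln(1+c)` can be checked numerically against the exact binomial tail. The sketch below is illustrative only; the function names `upper_tail` and `chernoff_bound` are hypothetical, and the `X_i` are assumed to be independent 0/1 variables with `Pr[X_i = 1] = p`.

```python
from math import comb, exp, log

def upper_tail(n, p, c):
    """Exact Pr[X >= (1+c)pn] for X ~ Binomial(n, p)."""
    threshold = (1 + c) * p * n
    return sum(comb(n, k) * p**k * (1 - p)**(n - k)
               for k in range(n + 1) if k >= threshold)

def chernoff_bound(n, p, c):
    """e^{pn(c - (1+c) ln(1+c))}, the bound obtained with t = ln(1+c)."""
    return exp(p * n * (c - (1 + c) * log(1 + c)))
```

For example, comparing `upper_tail(200, 0.5, 0.2)` with `chernoff_bound(200, 0.5, 0.2)` shows how much slack the bound leaves for a particular choice of `n`, `p` and `c`.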

Corollary. If `p=1/2 + epsilon` for some `epsilon > 0`, then the probability that `sum_(i=1)^n X_i le n/2` is at most `e^(-epsilon^2n/4)`.

Proof. Take `c = epsilon/(1/2+ epsilon)`, so that `(1-c)pn = n/2`, and apply the analogous bound for the lower tail `Pr[X le (1-c)pn]`. Q.E.D.
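The corollary is what drives error reduction by majority vote: if one run is correct with probability `1/2 + epsilon`, the majority of `n` independent runs is wrong only when at most `n/2` runs are correct. The sketch below compares that exact probability with the corollary's bound and computes how many runs suffice for a target error `delta`. The function names are hypothetical and chosen for this illustration.

```python
from math import ceil, comb, exp, log

def majority_error(n, eps):
    """Exact Pr[sum X_i <= n/2] when each X_i is 1 with probability 1/2 + eps."""
    p = 0.5 + eps
    return sum(comb(n, k) * p**k * (1 - p)**(n - k)
               for k in range(n // 2 + 1))

def corollary_bound(n, eps):
    """The corollary's bound e^{-eps^2 n / 4}."""
    return exp(-eps**2 * n / 4)

def runs_needed(eps, delta):
    """Smallest n with e^{-eps^2 n / 4} <= delta, i.e. n >= 4 ln(1/delta) / eps^2."""
    return ceil(4 * log(1 / delta) / eps**2)
```

In particular, the number of runs grows only logarithmically in `1/delta`, which is why an error as small as `2^(-n-1)` costs only polynomially many repetitions.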

The next result shows that, to a certain degree, BPP can be derandomized:

Theorem. `BPP subseteq` P/poly.

Proof. Suppose `L in BPP`. Then, by error reduction, there exists a TM `M` that on inputs of size `n` uses `m` random bits and such that for every `x in {0,1}^n`, `Pr_r[M(x,r) ne L(x)] le 2^(-n-1)`. Say that a string `r in {0,1}^m` is bad for an input `x in {0,1}^n` if `M(x,r) ne L(x)`, and otherwise call `r` good for `x`. For every `x`, at most `2^m/(2^(n+1))` strings are bad for `x`. Adding over all `x in {0,1}^n`, there are at most `2^n(2^m/(2^(n+1))) = 2^m/2` strings that are bad for some `x`. In particular, there exists a string `r_0 in {0,1}^m` that is good for every `x in {0,1}^n`. We can hardwire such a string `r_0` to obtain a circuit `C` (of size at most quadratic in the running time of `M`) that on input `x` outputs `M(x,r_0)`. The circuit `C` will satisfy `C(x) = L(x)` for every `x in {0,1}^n`. Q.E.D.
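The counting argument can be illustrated on a tiny toy machine. The sketch below is hypothetical throughout: the language (parity), the machine `machine(x, r)` and the choice of which random strings are bad are assumptions made so that each input has exactly one bad string out of `2^m`, which respects the `2^(-n-1)` error bound when `m ge n+1`. A brute-force search then finds a string `r_0` that is good for every input.

```python
N_BITS, M_BITS = 3, 5   # input length n and number of random bits m (toy sizes)

def language(x):
    """Toy language (an assumption): parity of the bits of x."""
    return bin(x).count("1") % 2

def machine(x, r):
    """Toy M(x, r): errs only when r equals the single bad string 4*x + 1."""
    answer = language(x)
    return 1 - answer if r == 4 * x + 1 else answer

def bad_strings():
    """All r that are bad for at least one input x."""
    return {r for r in range(2 ** M_BITS)
            for x in range(2 ** N_BITS) if machine(x, r) != language(x)}

def find_good_string():
    """Brute-force search for an r_0 that is good for every x."""
    bad = bad_strings()
    for r in range(2 ** M_BITS):
        if r not in bad:
            return r
    return None

R0 = find_good_string()

def hardwired(x):
    """The 'circuit' C: M with the random string fixed to r_0."""
    return machine(x, R0)
```

The search here is exponential in `m`; the theorem only asserts that a good `r_0` exists for each input length, which is why the result places BPP in a non-uniform class rather than in P.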