Nonparametric Learning

CS156

Chris Pollett

May 11, 2015

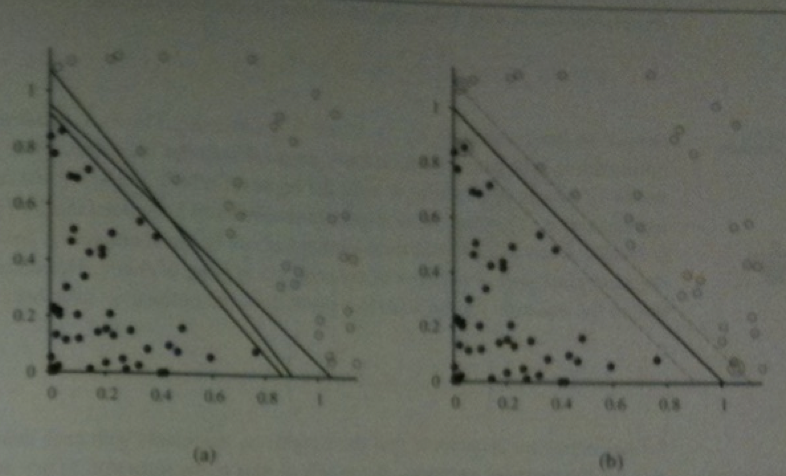

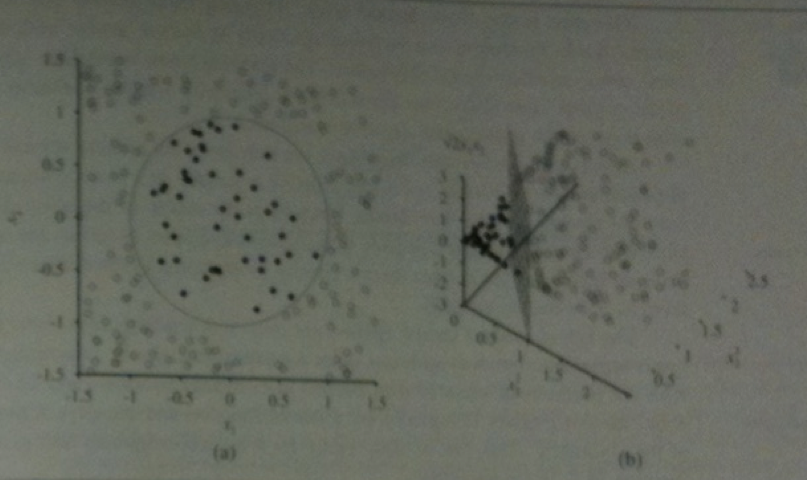

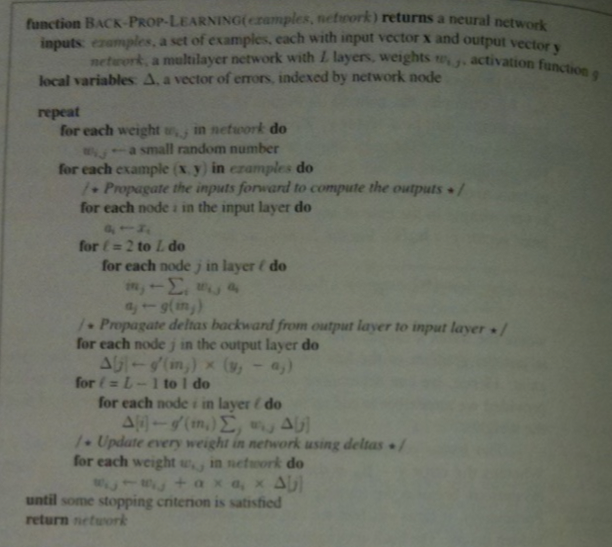

Putting this all together, we get the following algorithm:

The book has a graph showing that, on the restaurant example, decision tree learning does only slightly better than a feed-forward network.

Which of the following is true?