Multiprocessing

Basic Concepts

Multiprocessing or multitasking is the ability of an operating system to interleave the execution of multiple programs.

Multiprocessor refers to a computer with more than one processor. In effect, a multiprocessing OS creates the illusion of a multiprocessor. Note that a multiprocessor computer can have a multiprocessing operating system. In this case the OS interleaves execution of N programs with M processors.

Implementing multiprocessing is an example of a resource allocation problem. In this case the resource is a bank of M processors. The consumers of this resource are running programs. Each program needs a processor in order to run. The component of the OS that allocates processors to programs is called the scheduler.

Processes

When a user runs a program:

the OS allocates memory segments for the program:

The binary instructions are loaded into the code segment. Global variables are allocated in the static segment. The stack segment will be used to local variables and parameters for active functions. The heap segment will hold dynamic data structures created by the program.

A program that has been loaded into memory is called a process. The OS maintains a record of each process. These records are called process control blocks or PCBs.

Process Control Blocks (PCBs)

The PCB keeps track of

1. the current state of the corresponding process

2. the unique ID number of the corresponding process (PID)

3. the priority of the corresponding process

4. the context of the corresponding process

The context of a process includes all data stored in memory areas that must be shared with other processes. This includes the CPU registers (including the program counter and stack pointer) as well as the page table. (The page table maps virtual addresses generated by the program to physical addresses in main memory. It can be large, 0.5 - 2 Kbytes in size.) The context may also include the starting address and length of each memory segment allocated to the process as well as the handles of all files the process has opened.

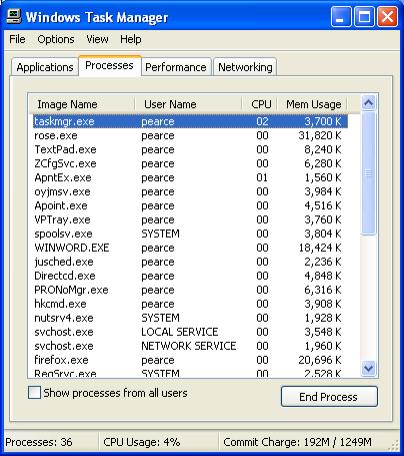

We can use the Windows Task Manager (press Ctrl-Alt-Delete) to see these PCBs:

Here's a Java sketch of a PCB:

class PCB {

// states:

public static final int READY = 0;

public static final int RUNNING = 1;

public static final int BLOCKED = 2;

public static final int TERMINATED = 3;

// priorities:

public static final int HI = 0;

public static final int MEDIUM = 1;

public static final int LOW = 2;

// PID generator:

public static int nextPID = 0;

// this PCB:

int state = 0;

int priority = MEDIUM;

int pid = nextPID++; // process ID

int pc = 0; // program counter

int sp = 0; // stack pointer

int[] registers = new int[32];

Map pageTable = new Hashtable();

public void resume() {

// resume execution at pc

}

}

The Lifecycle of a process

Here's a state diagram showing process/PCB lifecycle (i.e., values of PCB.state and legal transitions):

java.lang.Runtime

Every JRE (Java runtime environment) has a single object that represents it. This object is an instance of the class java.lang.Runtime. Here's how we get our hands on it:

Runtime jre = Runtime.getRuntime();

We can use jre to execute and interact with other programs.

For example, assume the following C++ program has been compiled and linked and lives in a file called Echo.exe:

#include <iostream>

#include <string>

using namespace

std;

int main() {

string input;

while(true) {

cin >> input;

if

(input == "quit") break;

cout << "echo: " +

input + '\n';

}

cout << "echo

quitting\n";

return

0;

}

Our Java program obtains the jre, then uses it to execute Echo.exe:

Runtime jre = Runtime.getRuntime();

Process proc = jre.exec("Echo.exe");

The Runtime.exec() method returns a java.lang.Process object. This object is the JRE's PCB for the Echo.exe process. Among other things, we can obtain the standard input and output streams associated with this process:

BufferedReader fromProc =

new BufferedReader(

new

InputStreamReader(proc.getInputStream()));

PrintWriter toProc =

new PrintWriter(

new BufferedWriter(

new OutputStreamWriter(

proc.getOutputStream())), true);

Thus, fromProc is an input stream in our Java program that represents stdou in Echo.exe, and toProc is an output stream in our Java program that represents stdin in Echo.exe:

Here's the complete code:

import java.io.*;

public class ProcTest {

public static void main(String[] args)

{

try {

Runtime jre =

Runtime.getRuntime();

Process proc =

jre.exec("Echo.exe");

BufferedReader fromProc =

new BufferedReader(

new InputStreamReader(proc.getInputStream()));

PrintWriter toProc =

new PrintWriter(

new BufferedWriter(

new OutputStreamWriter(

proc.getOutputStream())),

true);

toProc.println("Hello");

String result =

fromProc.readLine();

System.out.println("result =

" + result);

toProc.println("Bye");

result = fromProc.readLine();

System.out.println("result =

" + result);

toProc.println("quit");

result = fromProc.readLine();

System.out.println("result =

" + result);

} catch(Exception e) {

System.err.println(e.getMessage());

}

}

}

Here's the output of ProcTest:

result = echo: Hello

result = echo: Bye

result = echo quitting

Java also provides the System class, which provides static fields and methods for accessing properties of the underlying system:

class System {

static PrintStream err, out;

static InputStream in;

static Properties getProperties() { ...

} // get system properties

static long currentTimeMillis() { ... }

// etc.

}

Scheduling

The scheduler is the OS component responsible for allocating and deallocating the CPU. Essentially, the PCBs of all processes in the READY state reside in a ready queue (which might be a priority queue). The scheduler is a privileged process that perpetually:

1. Removes the next process from the ready queue and makes it the

current process (i.e., gives the CPU to it).

2. Waits for the current process to terminate, block, or use up its allocated

CPU time.

3. Takes the CPU away from the current process.

Assume a simple PCB Queue class has been declared:

class Queue extends LinkedList {

public void enQueue(PCB pcb) {

add(size() - 1, pcb);

}

public PCB deQueue() {

if (size() > 0) return

(PCB)remove(0);

return null;

}

}

Here's a Java sketch of a scheduler:

public class Scheduler {

private Queue readyQueue = new Queue();

private int timer = 0;

private PCB currentProc = null;

private void dispatch(PCB pcb) {

// preemption:

if (currentProc.state ==

PCB.RUNNING) {

currentProc.state = PCB.READY;

readyQueue.enQueue(currentProc);

}

// save context of current proc:

// update currentProc.pc

// update currentProc.sp

// update currentProc.registers

// update currentProc.pageTable

// etc.

currentProc = pcb;

// load context of next proc:

// load currentProc.pc

// load currentProc.sp

// load currentProc.registers

// load currentProc.pageTable

// etc.

currentProc.state = PCB.RUNNING;

currentProc.resume();

}

public void run() {

while(true) {

PCB pcb = readyQueue.deQueue();

if (pcb != null) {

dispatch(pcb);

timer = 0;

while(pcb.state == PCB.RUNNING

&& timer++ < 10); // wait

}

}

}

}

Scheduling Algorithms

From the scheduler's point of view, each process is an alternating sequence of CPU and I/O bursts. These bursts are of random durations:

![]()

Generally, short CPU bursts are far more common than long CPU bursts.

A CPU scheduling algorithm attempts to optimize one or more of the following parameters:

CPU utilization (0% - 100%)

Throughput = # procs completed/hour

Turnaround time = length of time for a process P to complete

Waiting time = time process P spends in ready queue

Response time = time for process P to respond to user request

First-Come, First-Served (FCFS)

The simplest scheduling algorithm simply orders the ready queue by arrival time.

Assume the following workload

P1 24 msecs

P2 3 msecs

P3 3 msecs

If these processes arrive in the order P1, P2, P3, then the average wait time will be:

(0 + 24 + 27)/3 = 17 msecs

If these processes arrive in the order P2, P3, P1, then the average wait time will be:

(0 + 3 + 6)/3 = 3 msecs

Shortest Job First (SJF)

In this case the ready queue is a priority queue where the highest priority goes to the process with the shortest next CPU burst time. FCFS is used to break ties.

Assume the following workload:

P1 6 msecs

P2 8 msecs

P3 7 msecs

P4 3 msecs

Then these processes would be scheduled as:

P4, P1, P3, P2

The average wait time will be:

(0 + 3 + 9 + 16)/4 = 7 msecs

The next CPU burst time is predicted as follows. We associate two arrays to each process:

int[] next; // = array of predicted burst times

int[] previous; // = array of actual burst times

Each time the process enters its RUNNING state the time is recorded. When the process exits the RUNNING state, the actual runtime is computed and stored in previous:

previous[n] = currentTime = startTime;

We predict the next burst time using the formula:

next[n + 1] = weight * previous[n] + (1 - weight)* next[n];

The weight constant is between 0 and 1 and determines the influence of previous predictions. For example, If weight = 1, then the predicted next burst is the same as the previous burst.

Priority Scheduling

SJF is a special case or priority scheduling. Instead of using the predicted next burst time to determine the position of the process in the ready queue, we can use the average ration of I/O bursts to CPU bursts, memory usage, or a user defined priority.

Priority scheduling can lead to starvation as a low priority process may never get the CPU. (When they shut down the IBM 7094 at MIT in 1973 they found a low priority process that had been scheduled to run in 1967 but never made it to the front of the ready queue!)

Round-Robin Scheduling (RR)

RR scheduling is FCFS with preemption. If a process doesn't voluntarily give up the CPU after a fixed period of time has elapsed, the OS interrupts the process and places it at the end of the ready queue. The time period is called a time slice and is generally in the range of 10 to 100 milliseconds.

Assume the following workload:

P1 24 msecs

P2 3 msecs

P3 3 msecs

Assume a time slice of 4 milliseconds. Then the schedule looks like:

P1, P2, P3, P1, P1, P1, P1, P1

The average wait time is:

(0 + 4 + 7 + 10)/3 = 7 msecs

Multilevel Queue Scheduling (MQS)

Of course a scheduler can have multiple ready queues:

Queue[] queues = new Queue[5];

where

queues[0] = system processes

queues[1] = interactive processes

queues[2] = batch processes

// etc.

Different scheduling algorithms can be used for different queues, where processes in queues[i] have higher priority than processes in queues[j] when i < j.

Multilevel Feedback Queue Scheduling (MFQS)

In ordinary MQS processes have pre-assigned ready queues. In MFQS I/O-bound processes migrate to higher priority queues, while CPU-bound processes migrate to lower priority queues.